Difference between revisions of "A computable framework for accountable data assets"

Shaohai.guo (talk | contribs) (→TBD: Grammar and Typos) |


| (127 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

=Synopsis=

This article argues that complex web services can '''always''' be composed of simple data types, such as [[key-value pair]]s. Methodically utilizing the '''[[universal]]'''<ref>{{:Video/Toposes - "Nice Places to Do Math"}}</ref><ref>{{:Video/Motivation for a Definition of a Topos}}</ref> properties of [[key-value pair]]s can significantly reduce the cost and development effort of increasingly feature-rich web-based services. This systematic approach improves the following three areas of web service development and operations:

# [[Universal Abstraction]] ([[Sound and Complete]]): Representing domain-neutral knowledge content in a [[key-value pair]]-based [[programming model]] ([[functional and declarative model]]) that allows flexible composition of data assets to create new instances of data assets, such as web [[page]]s, digital [[file]]s, and web [[service]]s.

# [[Accountability]] ([[Terminable]] responsibility tracing): Enforcing a formalized web service maintenance workflow by assigning accountability of changes to three account types. The accountability of content changes goes with [[Externally Owned Account]]s. The business logic of workflows is specified in formalized workflow description languages. Each version of the workflow specification is uniquely bound to a [[Contract Account]]. Every initiation of a workflow is reflected in the creation of a [[Project Account]], which captures all relevant information content related to the execution effects of the workflow. The definition of [[Project Account]] extends the technical specification of [[Smart Contract]] as specified in the design of [[Ethereum]].<ref>{{:Paper/Ethereum: A Next-Generation Smart Contract and Decentralized Application Platform}}</ref><ref>{{:Paper/ETHEREUM: A SECURE DECENTRALISED GENERALISED TRANSACTION LEDGER}}</ref><ref>{{:Book/Political Numeracy}}</ref>

# [[Self-documenting]] ([[Semantic precision]]<ref>{{:Video/Kris Brown: Combinatorial Representation of Scientific Knowledge}}</ref>): [[PKC]] is a documentation-driven [[DevOps]] practice. It integrates human-readable documents using [[MediaWiki]]'s [[hyperlink]]s and [[mw:Manual:Special_pages|Special pages]] to represent the knowledge or state of the microservice at work. [[Self-documenting]] is achieved by relating [[Semantic MediaWiki|semantic labeling technology]] to industry-standard [[Application Programming Interface]]s ([[API]]s) of [[Git]] and [[Docker]]/[[Kubernetes]] to reflect and report the status and historical trail of the [[PKC]] system at work. The real-time aspect of this software architecture is accomplished by managing timestamp information of the system using [[blockchain]]-based public timing services so that data content changes will be recorded by globally shared clocks.

The novelty of this [[key-value pair]]-centric approach to data asset management is achieving the simplest possible system representation without oversimplification.<ref>{{Blockquote|text=Everything Should Be Made as Simple as Possible, But Not Simpler.|sign=[[Albert Einstein]]|source=[https://quoteinvestigator.com/2011/05/13/einstein-simple/ Quote investigator]}}</ref> It allows arbitrary functions, data content, and machine-processable causal relations to be represented uniformly in terms of [[key-value pair]]s. This [[key-value pair]] data primitive can also be used as a generic measuring metric to enable a [[sound]], [[precise]], and [[terminable]] framework for modeling complex web services.

=Background and Introduction=

This article shows that the complexity of web service [[development and maintenance operation]]s can be significantly reduced by adopting a different data modeling mindset. The novelty of this approach lies in manipulating data assets in a concrete data type ([[key-value pair]]) while abstractly treating them as algebraic entities,<ref name=AoS>{{:Paper/Algebra of Systems}}</ref><ref name=AOIS>{{:Thesis/The Algebra of Open and Interconnected Systems}}</ref> so that system complexity and data integrity concerns can always be reasoned about through a set of [[computable|computable/decidable]] operations.<ref>{{:Video/The Man Who Revolutionized Computer Science With Math}}</ref><ref>{{:Book/Specifying Systems}}</ref> To deal with growth in functionality and data volume methodically, the accountability of system changes is delegated to three types of accounts: [[Externally Owned Account|Externally Owned Accounts]], [[Contract Account|Contract Accounts]], and [[Project Account|Project Accounts]]. Respectively, these three account types map accountability to human participants, behavioral specifications, and project-specific operational anecdotal evidence. In other words, this data management framework represents system evolution possibilities in exact terms of human identities, version-controlled source code, project-specific execution traces, and nothing else, thereby using technical means to guarantee [[non-repudiation]], [[transparency]], and [[context awareness]].
Finally, this article is written using a prototypical data management tool, namely the [[PKC]] web service package, so that readers and potential editors who browse this article through the [[PKC]] web service directly experience the algebraic properties and data-centric nature realized in an operational tool that can derive data-driven improvements in a self-referential way.

=Universality: an Axiomatic Assumption in Data Science=

It is necessary to axiomatically assume that all information content can be approximately represented using a finite set of pre-defined symbols. The [[universe]] of symbols simply means the complete collection of all admissible symbols. The notion of logical [[universality]]<ref>{{:Book/Discrete Mathematics with Applications}}</ref>, or rules that exhaustively apply to all admissible symbols in the symbol '''universe''', is the intellectual foundation of logical [[proof]]s, therefore providing the scientific foundation of data integrity. Without this integrity assumption, data cannot have rigorous meaning.

In this article, we will treat [[key-value pair]]s as the [[universal component]] to serve as the unifying data and function representation device, so that we can reduce the learning curve and system maintenance complexity. Based on the axiomatic assumption, it is well known that a special kind of [[key-value pair]], also known as the [[Lambda calculus]] (a.k.a. [[S-expression]]), may approximate any computational task.

==Lambda Calculus: A recursive data structure that can represent all decision procedures==

According to the universality assumption, all finite-length decision procedures can be represented as [[Lambda Calculus|Lambda calculus]]<ref>To understand the intricate mechanisms of [[Lambda calculus]], and why and how this simple language can be universal, please read this page: [[Dana Scott on Lambda Calculus]].</ref><ref>{{:Paper/Outline of a Mathematical Theory of Computation}}</ref> programs. We know this statement is true because [[Lambda calculus]] is known to be '''Turing complete''',<ref>{{:Paper/mov is Turing-complete}}</ref> meaning that it can model every computable decision procedure. More technically, '''Turing completeness''' reveals the following insight:

 All decision procedures can be recursively mapped onto a nested structure of switching (If-Then-Else) statements.

To test this idea, one may observe that [[Lambda calculus]] is a three-branch switching statement that represents three types (<math>\alpha</math>, <math>\beta</math>, and <math>\eta</math>) of computational abstractions. We consider each type of abstraction computational, because the variable values and the interpretation results of expressions are determined dynamically.

{{:Table/Lambda Calculus abstractions}}

As shown in the table above, all three admissible data types can be symbolically represented as textual expressions occasionally annotated by dedicated symbols, such as <code>λ</code>. In any case, all three data types are admissible forms of functional expressions. In compiler literature, this representational form of functions is called an [[S-expression]], short for [[symbolic expression]]. It is well-established that an [[S-expression]] (often denoted in [[Backus-Naur form]]) can be used to represent any computing procedure and can also encode any digitized data content. To maximize representational efficiency, different kinds of data content should be encoded using different formats. Based on the [[universality]] assumption, all data content can be thought of as sequentially composed symbols.
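The three-branch reading of [[Lambda calculus]] can be made concrete with a small interpreter. The following is a minimal sketch in Python, not part of [[PKC]]: it assumes the three admissible forms are the standard syntactic ones (variable, abstraction, application), since the transcluded table is not reproduced here, and the names <code>Var</code>, <code>Lam</code>, <code>App</code>, <code>substitute</code>, and <code>evaluate</code> are hypothetical. It also assumes bound variable names are unique, so no renaming step is implemented.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Lam:
    param: str
    body: "Term"


@dataclass(frozen=True)
class App:
    func: "Term"
    arg: "Term"


Term = Union[Var, Lam, App]


def substitute(term, name, value):
    """Replace free occurrences of `name` in `term` with `value`.

    Assumption: bound names are unique, so variable capture cannot occur.
    """
    if isinstance(term, Var):
        return value if term.name == name else term
    if isinstance(term, Lam):
        if term.param == name:  # `name` is shadowed inside this abstraction
            return term
        return Lam(term.param, substitute(term.body, name, value))
    return App(substitute(term.func, name, value),
               substitute(term.arg, name, value))


def evaluate(term, fuel=200):
    """Reduce `term` by switching on which of the three forms it has."""
    if fuel <= 0:
        raise RuntimeError("reduction did not finish within the step budget")
    if isinstance(term, Var):       # branch 1: a variable is left as it is
        return term
    if isinstance(term, Lam):       # branch 2: reduce inside the abstraction
        return Lam(term.param, evaluate(term.body, fuel - 1))
    func = evaluate(term.func, fuel - 1)   # branch 3: application
    if isinstance(func, Lam):
        return evaluate(substitute(func.body, func.param, term.arg), fuel - 1)
    return App(func, evaluate(term.arg, fuel - 1))


# Church-encoded booleans behave like a two-branch If-Then-Else selector.
TRUE = Lam("t", Lam("f", Var("t")))
FALSE = Lam("t", Lam("f", Var("f")))
```

Applying <code>TRUE</code> to two arguments selects the first and <code>FALSE</code> selects the second, which is the If-Then-Else behavior that the nested switching structure is built from.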

==Decision Procedure represented in a Switch Statement==

To illustrate that all decision procedures can be represented by nothing but [[key-value pair]]s, we first start with the notion of control structure in terms of '''switching''' statements. A switching statement is simply a lookup table. Once given a certain value, it will '''switch''' to a defined procedure labeled with the matched value.
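The lookup-table view of a switching statement can be sketched outside of wikitext as well. The Python fragment below is only an illustration under stated assumptions: the table <code>CASES</code>, its keys, and the helper <code>switch</code> are hypothetical and do not reproduce the content of any demo page on this wiki.

```python
def switch(key, cases, default):
    """Look up `key` in the `cases` table and run the procedure stored there."""
    procedure = cases.get(key, default)
    return procedure()


# Each key-value pair binds a matched value to the procedure it selects.
CASES = {
    1: lambda: "one",
    2: lambda: "two",
    3: lambda: "three to five",
}
# Several keys may share one branch by pointing at the same procedure.
CASES[4] = CASES[5] = CASES[3]

result = switch(4, CASES, default=lambda: "no match")
```

The whole control structure is held in a dictionary, so adding or removing a branch is a data change rather than a code change.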

Using the built-in magic word<ref>This article: [[mw:Lua/Scripting#Deep_dive_on_Lua#Why Lua Scripting|Why Lua Scripting]], explains how to turn wikitext into a functional programming language using <code>#switch</code> and <code>#if</code> magic words.</ref> of [[MediaWiki]], the code and the MediaWiki displayed result can be shown in the following table:

{| class="wikitable"

| Line 33: | Line 33: | ||

|-

|

{{#invoke:CodeWrapper|returnDecodedText|{{:Demo:SwitchStatement7}} }}

||

{{#invoke:CodeWrapper| unstrip | {{:Demo:SwitchStatement7}} }}

| Line 41: | Line 41: | ||

==The If-Then Control Structures as the minimal switch statement==

Given the examples above, it should be clear that this switching statement can produce only 6 distinct outputs, since the admissible cases have a total of 6 alternative branches (in this case, 3, 4, and 5 are considered to be one branch). In other words, a <code>#switch</code> is a generalized function that allows programmers to define an arbitrary number of branches. In contrast, the <code>[[mw:Help:Extension:ParserFunctions##ifexpr|#ifexpr]]</code> function is a hard-wired branching statement with exactly two possible branches, where two branches are the minimal number required to be a switch statement. Since there are only two options, the relative sequential positions of the two branches become the implicit keys (position indices). For the function <code>#ifexpr</code>, the first branch is selected if the input expression evaluates to <code>1</code> (true), and the second branch is selected if it evaluates to <code>0</code> (false).

The first '''If''' example:

| Line 66: | Line 66: | ||

{{#invoke:CodeWrapper| unstrip |{{:Demo:IfExpr2}} }}

|}

This pair of <code>#ifexpr</code> examples shown above demonstrates the notion of selecting execution paths. The first example shows that not only is the expression <code>3<5</code> evaluated to be false, but the evaluation also rewrites the string from the original form <code><nowiki>This expression is {{#expr: 0=0}}</nowiki></code> to <code>This expression is {{#expr: 0=0}}</code>. This demonstration reveals the basic behavior of the expression rewrite process, which is how [[Lambda calculus]] works. (For interested readers, examples of how these code samples are transcluded, and of how the displayed sample is selected, can be found on the page [[Demo:CodeWrapper]].)
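The claim that a two-branch conditional is the smallest switch, with branch positions acting as implicit keys, can be sketched in Python. This is an illustration only: <code>if_expr</code> is a hypothetical helper that mimics the selection rule described in this section, not the MediaWiki implementation of <code>#ifexpr</code>.

```python
def if_expr(condition, first_branch, second_branch):
    """Select a branch with a two-entry lookup table.

    The keys 1 and 0 are the implicit position indices: a true condition
    selects the first branch and a false condition selects the second.
    """
    table = {1: first_branch, 0: second_branch}
    return table[int(bool(condition))]


chosen = if_expr(3 < 5, "first branch", "second branch")
```

Written this way, the conditional is a special case of the lookup-table switch with exactly two keys.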

==Lambda Calculus as a three-branch recursive switching statement==

| Line 79: | Line 79: | ||

===Example: Wiki code as annotated Lambda expression===

A [[Lambda calculus]] expression, <code><nowiki><λexp></nowiki></code>, can be encoded in MediaWiki as <code><nowiki>{{#expr: computable expression}}</nowiki></code>. In this wiki's syntactical structure, <code>#expr:</code> is equivalent to the marker <code>λ</code> in [[Lambda calculus]]. In other words, the entire wiki page that you are reading and editing is effectively an annotated [[Lambda calculus]] expression. Whenever a segment of the text shows the pattern of <code><nowiki>{{#expr: computable expression}}</nowiki></code>, the string rewrite system on the web server will start interpreting and following the switching structure denoted by the changeable content of <code><nowiki><var></nowiki></code> and <code><nowiki><λexp></nowiki></code>. The possible values of these three types of <code><nowiki><λexp></nowiki></code> can be as large as one's database can hold. This is basically where database technologies and [[PKC]] play a role.

=Key-value pairs for composing web-based computational services=

Knowing that all decision procedures are composed of switching statements, one may apply this simple principle to the composition of web services. The following table should help relate concepts developed in [[Lambda calculus]] to web services:

{{:Table/Lambda calculus and Web Services}}

==Managing Functions as Catalogs of Names in Adequate Cycle Times==

When mapping structural information of an arbitrary system to a [[set]], a [[morphism]] (a generalized kind of [[function]]) that conducts this mapping is called a [[representable functor]]. This mapping can also be represented using an [[S-expression]], and syntactically, it is denoted as a pair of data elements called a [[key-value pair]]. In the web-based environment, every [[hyperlink]] is a [[key-value pair]], where the '''key''' is a [[Universal Resource Locator|Uniform Resource Locator]] ([[URL]]) string and the '''value''' is the page or data element referenced by the [[URL]]. A collection of [[key-value pair]]s can be considered as a dictionary of [[hashtable|hashtables]], where keys are unique values of the hashes. Once everything is represented as a [[URL]], it will have a shelf life, meaning that its validity will change over time. Managing every URL in terms of its time-based properties will be the main challenge in maintaining the integrity of name spaces.
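The idea that a hyperlink is a key-value pair with a shelf life can be sketched as a small catalog. The Python fragment below is a minimal illustration under stated assumptions: the <code>Entry</code> record, its <code>valid_until</code> field, the <code>resolve</code> helper, and the <code>example.org</code> URLs are all hypothetical and are not part of [[PKC]].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    value: str          # the page or data element the URL refers to
    valid_until: float  # hypothetical expiry time, in seconds since the epoch


def resolve(catalog, url, now):
    """Return the value bound to `url`, or None if it is unknown or expired."""
    entry = catalog.get(url)
    if entry is None or now > entry.valid_until:
        return None
    return entry.value


# The catalog is a dictionary: URL strings are keys, entries are values.
catalog = {
    "https://example.org/page/Synopsis": Entry("synopsis text", valid_until=2000.0),
    "https://example.org/page/Draft": Entry("draft text", valid_until=1000.0),
}
```

Resolving the same URL at different times can give different answers, which is the time-based property that name space management has to track.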

==Security through Accountability==

Systems are composed of imperfect subsystems. Therefore, it is impossible to guarantee the absolute security and integrity of a system. The integrity and security of a system can only be guaranteed through traceable accountability. By associating every data change with an appropriate [[account]], every system will have accounts to which accountability can be assigned.

==System Observability with automated testing==

To involve the largest possible number of users with the system, the strategy is to reveal the data through the most egalitarian kind of user interface. In this case, we choose the web browser as the common interface for humans and machines. Tools such as [[Selenium]] or [[Quant-UX]] can act as automated users to test the overall system. This closes the loop: data drives the behavior of the tests, and the tests return results about the data service system.

=Data Integrity Concerns and Accountability (TBD)=

This section will detail the implementation of [[PKC]] and its software engineering-related concerns.

==Representational Closure==

{{:Table/Representable Closure}}

==Extensibility, Scalability, and Learnability==

Based on results presented in the [[Algebra of Systems]], this computational framework specifies an algebraically-formulated accounting system for transacting data assets on the web. Operationally, this article defines the data capture and data verification procedure in terms of the above-mentioned [[data asset classes]] so that it can leverage the mathematical rigor to reason about data integrity. Moreover, this article prescribes an implementation roadmap to construct an [[open source]] and [[self-owned]] [[cloud computing]] (network-based data processing) service utilizing a decentralized security system, so that small and large organizations can utilize the same data processing infrastructure to conduct business activities. This will significantly reduce the cost and accelerate business transaction cycles, therefore enabling more people to utilize the technical potential of the supply network of data, products, and services on the Internet. Most importantly, it will enable a much larger crowd to utilize data processing technologies, such as [[cloud computing]] services, without having to become full-stack software developers, simply by browsing through catalogs of [[PKC]]-packaged publicly tested data assets.

==Decision-making agents represented as Accounts==

An [[Account]] is a type of data structure that defines conditional rights based on ownership. This can be accomplished technically using cryptographically guaranteed algorithms. Inspired by [[Ethereum]], for the right to assign ownership to resources, only two kinds of accounts are possible:

# [[Externally Owned Account]]: This class of accounts is controlled by agents or agencies that must authenticate their identity and can exercise their rights via an access control list.

# [[Programmable Account]] (a.k.a. [[Contract Account]]): This class of accounts is controlled by source code that is published and executed based on a code base that controls the [[Externally Owned Account]], which is implicitly trusted by all participants.
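The two kinds of accounts listed above can be sketched as plain data structures. The following Python fragment is a simplified illustration, not the [[Ethereum]] account model: the class names, the <code>permissions</code> field standing in for an access control list, and the choice to derive a programmable account's address from a SHA-256 hash of its source code are all assumptions made for this example.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ExternallyOwnedAccount:
    address: str
    permissions: frozenset = frozenset()  # stand-in for an access control list

    def may(self, action):
        """Check whether this account is allowed to perform `action`."""
        return action in self.permissions


@dataclass(frozen=True)
class ProgrammableAccount:
    source_code: str

    @property
    def address(self):
        # Assumption for this sketch: the address is the hash of the published
        # code, so every version of the code gets its own account identity.
        return hashlib.sha256(self.source_code.encode("utf-8")).hexdigest()


editor = ExternallyOwnedAccount("0xA11CE", frozenset({"edit", "sign"}))
workflow_v1 = ProgrammableAccount("release if tests pass")
workflow_v2 = ProgrammableAccount("release if tests pass and two accounts sign")
```

Binding the address to the code content is one way to make each version of a workflow specification uniquely identifiable; other derivation rules are equally possible.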

==Broadest Possible User Base==

This framework should provide intuitive user interfaces for entry-level users through [[popular web browsers|popularly-available]] [[web browser]]-based interfaces via features offered freely on the Internet, and create an open source turn-key solution that offers almost everyone on the web a [[self-sovereign]] [[cloud computing]] service. This revolutionary software artifact presents many business opportunities and inspires many new technologies; however, methods and tools to ensure their system integrity have not yet caught up with these changes. Complex software applications and business processes that have been serving a large portion of society are searching for systematic ways to migrate to modern technical infrastructures.

=Deployment and Interoperability of Accountable Data=

According to [[Book/Algebraic Models for Accounting Systems|Rambaud and Pérez]]<ref>{{:Book/Algebraic Models for Accounting Systems}}</ref><ref>{{:Paper/The Accounting System as an Algebraic Automaton}}</ref>, an algebraically-defined accounting (data capture and verification) practice may systematically automate the decision procedures for the following activities:

# Decide how to classify the data collected and send the collected data to relevant data processing workflows.

| Line 113: | Line 116: | ||

==Deployment Process==

A pragmatic way of deploying accountable data assets is to leverage the complementary features of immutable past history and unpredictable future events, together with the duality or even three-layered aspects of computation (the connections between Proof, Program Execution, and Grammar), to verify and validate the correctness of data content against a common blockchain, which assigns a single ordering sequence to all data elements. The plan is to use a generalized workflow to iteratively refine and optimize the content structure of data assets in a networked environment that assigns sociophysical meaning to data.

===Controlling the Logic of Deployment using Automated Tests===

In traditional testing practices, the controlling gate is a senior developer's reputation, or a central administration's authority, to approve test results before a piece of code is deployed to the public. In this proposed framework, by contrast, data assets, source code, and test cases are all considered to be named data assets that supporting parties nominate or pledge under their own account identities. Any account, whether it is an [[Externally Owned Account]] or a [[Programmable Account]], can sign off on a data content release to a particular stage of qualification based on a [[Ricardian Contract]]. This is a direct application of the well-known [[Smart Contract]] infrastructure to control a software production workflow, or a content release workflow, at a much larger scale. Clearly, the total number of participants required to release any given piece of data can be controlled by the [[Smart Contract]], which could be bound to a well-defined versioning system to make the [[Smart Contract]] a [[Ricardian Contract]].
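The sign-off gate described above can be sketched as a simple rule over account identities. The Python fragment below is a hypothetical illustration only: the threshold rule, the stage name, and the <code>release_approved</code> helper are assumptions for this example and do not describe an actual [[Smart Contract]] or [[Ricardian Contract]] implementation.

```python
def release_approved(signatures, eligible_accounts, required):
    """Approve a release when enough eligible accounts have signed off.

    `signatures` holds the account addresses that signed, `eligible_accounts`
    holds the addresses allowed to sign for this stage, and `required` is the
    number of distinct eligible signers needed.
    """
    valid_signers = set(signatures) & set(eligible_accounts)
    return len(valid_signers) >= required


# Hypothetical policy for one stage of qualification.
STAGING_POLICY = {
    "eligible_accounts": {"0xA11CE", "0xB0B", "0xC0DE"},
    "required": 2,
}

approved = release_approved(["0xA11CE", "0xC0DE", "0xEVE"], **STAGING_POLICY)
```

Because the policy is itself a set of key-value pairs, changing the number of required participants is a data change that can be versioned like any other data asset.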

===Lambda Calculus and Curry-Howard-Lambek Correspondence===

The second layer of this framework integrates test cases and test case execution using the well-known mathematical observation called the [[Curry-Howard-Lambek Correspondence]]. Under this view, data is submitted to predefined execution procedures, which are themselves pieces of data with timestamps associated with them, and each procedure transforms its input data content into output data content that signifies its '''judgment''' on the input data. By recording the execution trail of the input data together with the results of the test procedures, this trail can constitute a form of automated verification or validation of the system.

====Hermeneutical Circle and Data Configurations====

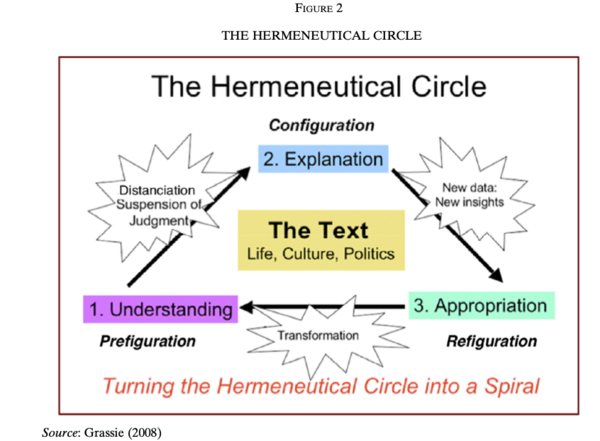

Think of a software or data content product as the subject of a configuration management exercise. The notion of [[Hermeneutics]], which refers to the theory and methodology of text interpretation (originally the interpretation of biblical texts, wisdom literature, and philosophical texts, but equally applicable to data interpretation), can then be applied. The following diagram is extracted from [[Paper/The Life and Works of Luca Pacioli|The Life and Works of Luca Pacioli]]:<ref>{{:Paper/The Life and Works of Luca Pacioli}}, Figure 2</ref>

[[File:HermeneuticalCircle.png|600px|thumb|center]]

In the Hermeneutical diagram:

# Configuration refers to the data content to be exposed to the public.

# Refiguration refers to data content that is being challenged and modified.

# Prefiguration refers to data content that is being proposed and will be processed through existing test procedures.

These three distinctive phases can be managed using [[configuration management]] tools, such as [[Git]] or [[Fossil]], as a version control database to keep the cycles of [[Hermeneutics|Hermeneutic]] evolutions moving forward in a controlled manner.

===Proof, Program Execution, and Grammar===

Following the assumptions in the [[Curry-Howard-Lambek Correspondence]], the notions of Proof, Program Execution, and Grammar are three aspects of a unifying system. These aspects of data content knowledge would only be "proven" if they are represented in their respective domain. The convenient fact is that these three data structures could be explicitly defined and be given appropriate abstractions within their domains. The work of managing software products can follow a compact universal data classification scheme and keep using the tools and methods provided by the three domains to prove, execute, and define the structural nature of every data element.

==Physical, Social and Operationalized Meaning of Data==

Once we have a generalized theoretical framework to manage abstract data, we need a concrete platform to assign physical meaning to data. In this case, we want to assign timestamps derived from a common blockchain to data packages. This will provide a consistent mechanism to note the latest modification time. This order-asserting property will help distinguish the structure of data dependency based on the temporal ordering, as well as the source of changes in terms of account addresses ([[Externally Owned Account|Externally Owned Accounts]] or [[Programmable Account|Programmable Accounts]]) mentioned before. In other words, this framework assigns physical meaning to data through timestamps, and social meaning to data through account addresses. Therefore, data assets and data content will naturally be associated with adequate accountability.
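The pairing of an ordering stamp (physical meaning) with an account address (social meaning) can be sketched as a hash-chained log. The Python fragment below is a minimal illustration and not a blockchain client: it assumes a sequential <code>height</code> counter as a stand-in for a blockchain-derived timestamp, and the helpers <code>append_record</code> and <code>verify</code> are hypothetical names.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder digest that precedes the first record


def _digest(body):
    """Hash the record body in a canonical (sorted-key) JSON form."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_record(ledger, account, payload):
    """Append a change, stamped with its position and the responsible account."""
    body = {
        "height": len(ledger),  # stand-in for a blockchain timestamp
        "account": account,     # who is accountable for the change
        "payload": payload,     # what was changed
        "previous": ledger[-1]["digest"] if ledger else GENESIS,
    }
    record = {**body, "digest": _digest(body)}
    ledger.append(record)
    return record


def verify(ledger):
    """Check that the order and the content of every record are intact."""
    previous = GENESIS
    for height, record in enumerate(ledger):
        body = {k: record[k] for k in ("height", "account", "payload", "previous")}
        if (record["height"] != height
                or record["previous"] != previous
                or record["digest"] != _digest(body)):
            return False
        previous = record["digest"]
    return True
```

Each record points at the digest of its predecessor, so reordering or editing an earlier record invalidates every record after it, which is what makes the temporal ordering usable as evidence.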

===Operationalized Meaning of Data===

Data can be manipulated with a large number of heterogeneous computers operating in various locations and configurations. This can have significant performance and data accuracy/consistency implications. From a [[PKC]] point of view, individual consumers of data content should not be burdened with sophisticated technologies and massive energy consumption or data storage requirements. This leads to treating data compression and refreshing, with adequate economic concerns, as a built-in function hidden from everyday usage. Since the innovation of [[Docker]]-like container virtualization technologies, it has become possible to operationalize data manipulation across a large number of computing architectures with consistent configurations. This minimizes the discrepancies of data manipulation by assuming that virtualized computers will not distort data content or functional correctness. Moreover, a mechanism such as [[Fossil]], which uses a relational data model ([[SQLite]] as a data repository) to manage files and data version history, can be a universal model to manage data in a recursively distributed manner.

==Interoperability of [[PKC]]==

Given that all data content can be associated with physical, social, and operationalized meanings in a rigorous way, grounded in lambda calculus, it is conceivable that the composability of data can be attained in a computational framework that associates humans, human agencies, and technically verified procedures/data content. This would be a useful framework to reduce uncertainty in data management, therefore enhancing the exchangeability of data and source code. More importantly, every [[PKC]] can be associated with these data management tools and infrastructures. Because each [[PKC]] instance identifies a specific version of the software and/or knowledge content, information consistency can be reached with minimal third-party intervention. Effectively, [[PKC]] can be used as the domain-neutral data management operating system that keeps data content consistent without leaking information beyond the system-prescribed perimeters.

===Open Format over Open Source===

Contrary to common belief, [[Open Format]] is more important than [[Open Source]], since source code can be a major burden to parse, understand, and test for validity. Formats of information, however, are by definition explicit and need to be open enough to be easily understood and to encourage interchange. Therefore, a [[key-value pair]]-based format could be a foundational design building block to support the [[Open Format]] idea. This requires a thorough and rigorous adoption of [[Open API Specification]], as well as the [[JSON]]/[[YAML]] [[key-value pair]]-based data format and declarative syntax for specifying data assets across [[File]], [[Page]], and [[Service]] data domains.

===The three objectives at G20 2022===

To enable inter-organizational workflows or data exchanges, according to [[Luhut Panjaitan]] of the [[wikipedia:Coordinating Ministry for Maritime and Investments Affairs|Coordinating Ministry for Maritime and Investments Affairs]] of [[Indonesia]], the host nation of the [[wikipedia:G20|G20]] in 2022, the [[Science of Governance]] should be spread through self-administered data, as prescribed in the following steps:

# The creation of a data storage and manipulation instrument ([[PKC]]) to enable a personalized and scalable containment of data assets.

# The creation of publicly available training programs and publishable curriculum to promote the understanding of governance through data.

# Inviting and working with global industry standards bodies to set adequate and fair data standards for all national participants.

Only with non-proprietary data asset management instrumentation, open and public educational programs on the value of data, and industry-wide data exchange protocols that are open to public examination can one realistically anticipate a continuous flow of data assets with minimal friction. Otherwise, the world will continue to see chaos caused not only by friction but by information asymmetry as well.

=Conclusion=

This article proposes a system composition/decomposition strategy with an algebraic programming model. It also presents a sample implementation, namely [[PKC]], as a self-sufficient building block of an inter-organizational data transaction system, and borrows the concept of "[[accounting]] practice" and its formal mathematical framework to ensure accountability and data consistency. The proposed framework differs from existing [[blockchain]]s or [[Web3]] systems in the following ways:

# It is a [[hyperlink|hyperlinked]] data asset management framework that uses [[key-value pair]]s to link content data, source code, and executable binary data images in one consistently abstracted workflow. The notion of [[key-value pair]]s is also the building block of [[Lambda calculus]], which provides a model of functional composition and can be applied to represent workflows. This workflow model allows anyone to reuse the content knowledge, source code, and operational experience of the [[PKC]] community. It allows organizations of any size to operate their own data asset management infrastructure using a chosen branch of this open-source framework of data asset management.

# The framework uses a data-driven (declarative) programming model that integrates content, executable functions, and networked data services as nothing but [[key-value pair]]s, so that it will simply grow and refine its own logical integrity as more [[key-value pair]]s accumulate. In other words, [[PKC]] is a scale-free and domain-neutral learning system that will naturally evolve its own structure and content as these [[key-value pair]]s are added to its data asset repository. Both good and bad results can be transparently reused by all other parties.

# It uses web-browser oriented data abstraction that presents all data assets in terms of [[page]] abstraction, so a universal namespace and data presentation mechanism covers all usage scenarios while remaining compatible with other universal data abstractions, such as [[file]] and [[service]] abstractions. This [[page]], [[file]], and [[service]] abstraction combo is defined and programmed into the web using the [[key-value pair]] programming model, and therefore offers the maximum reach in terms of participants and data consumption parties.

==TBD==

Since the appearance of the [[World Wide Web]] in 1995, world affairs have been transformed by ever-faster electronic data transaction activities. This data-driven phenomenon created an unprecedented global supply network that can be considered as a singular inter-connected web of data transaction activities. Up to the year 2022<ref>This document is revised on {{REVISIONMONTH1}} {{REVISIONDAY}}, {{REVISIONYEAR}}</ref>, this data-driven supply network has favored organizations or people who have deep pockets and access to more advanced Information Technologies. The competitive edge conferred by wealth and technology literacy has induced many unfair practices and even unethical and/or illegal transaction activities at a global scale. To resolve this issue, this article presents the [[Personal Knowledge Container]] ([[PKC]]) as a [[self-administered]] [[cloud computing]] service that reduces unfair competitive edges and lowers the cost of system participation and operation, which is necessary to address many fundamental issues caused by [[information asymmetry]].

Recent developments in [[blockchain]] and [[Decentralized identifier]] technologies, coupled with web-based applications and 4G/5G connected devices, have created a technical infrastructure that could significantly reduce the degree of unfairness/[[information asymmetry]] in the global marketplace. Anyone with access to an Internet-connected web-browsing device has been able not just to participate in the global supply network, but also to learn and operate their own business with minimal entry barriers. To continuously introduce late-breaking Information Technologies to the broadest possible range of users, the world needs to present a user experience through [[popular web browsers|popularly-available]] [[web browser]]s that will present a wide range of data formats, including natural language annotations and timely workflow instructions. Most importantly, the world needs to have a "fair" data security model that protects the interests of all participants in a transparent<ref>Transparency of security rules can be encoded in published [[Smart contract]]s, so that participants can decide to participate or not based on reading the explicitly specified contracts.</ref> way.

==Why and how this framework differs from existing approaches==

Existing web application frameworks are often developed and operated by highly skilled software development and operational teams that serve a specific set of profit-attaining objectives. Each instance of a web service will have a highly localized and protected set of operational data. This operational data and software configuration knowledge is a privately owned asset that is usually protected and not shared with the public. In contrast, [[PKC]] differs from existing data transaction systems, often known as [[Infrastructure as Code]] ([[IaC]]), in the following ways:

===Most people simply cannot believe it can be this simple===

[[Key-value pair]]s, or [[hyperlink|hyperlinked]] data content, are the simplest yet universal data type that connects our world and minds. When this universal instrument is made explicit and integrated with self-documented technical arguments to continuously explore and explain the opportunities for improvement, this data management framework and its derived data management tools, such as [[PKC]], can continuously improve their system correctness while accelerating all activities supported by [[PKC]].

===Simplicity enables massive and decentralized/distributed adoption, and generates trust-worthy data===

Because [[PKC]] is so simple, everyone can own and operate their own instance of [[PKC]], creating a larger base of egalitarian data processing and data verification/authentication/authorization agencies. This gives data a much more distributed/decentralized trust-worthiness: data witnessed by more independent agents and agencies is more trust-worthy.

===Trust-worthiness allows PKC-managed data asset to be used for error-correction===

Given the trust-worthiness of data, the data can be used to correct mistakes in content, source code, and binary executable images, so that it becomes a platform for [[DevSecOps]] workflows.

===Self-reflective error correction enables systematic learning===

When [[PKC]] can be deployed to a broad base of practices, it will enable a kind of self-reflective error correction feature, where many different kinds of applications and use cases can mutually verify and validate the quality of the [[key-value pair]]-encoded knowledge base. This goes back to the mythical story of the [[Tower of Babel]], where a unified language enables participants to build a structure that can scale up to unprecedented height.

# [[PKC]] as the e-Catalog of cloud-enabled data assets

[[PKC]] is a general-purpose framework that uses an encyclopedic approach to categorize and publish all existing data resources in terms of data content, source code, executable binary, and real world software operational data. This publicized framework of data asset management approach allows all participants to operate their own instances of [[PKC]] by leveraging the operational experience of the entire [[PKC]] community.

#Automate the composition and decomposition of software components

#Disseminate the most-recent-possible data that reflect verifiable truth

#Published data as a public Natural Resource<ref>[[Slide/Fab City Full Stack]]</ref>

<noinclude>

<references/>

=Related Pages=

[[Content relating to::PKC]]

[[Category:AoS]]

[[Category:If]]

[[Category:Accounting]]

</noinclude>

Latest revision as of 05:42, 7 December 2022

Synopsis

This article argues that complex web services can always be composed of simple data types, such as key-value pairs. Methodically utilizing the universal[1][2] properties of key-value pairs significantly reduces the cost and development effort of building ever more functionally rich web-based services. This systematic approach improves the following three areas of web service development and operations:

- Universal Abstraction (Sound and Complete): Representing domain-neutral knowledge content in a key-value pair-based programming model (functional and declarative model) that allows flexible composition of data assets to create new instances of data assets, such as web pages, digital files, and web services.

- Accountability (Terminable responsibility tracing): Enforcing a formalized web service maintenance workflow by assigning accountability of changes to three account types. The accountability of content changes goes with Externally Owned Accounts. The business logic of workflows is specified in formalized workflow description languages. Each version of the workflow specification is uniquely bound to a Contract Account. Every initiation of a workflow is reflected in the creation of a Project Account, which captures all relevant information content related to the execution effects of the workflow. The definition of Project Account extends the technical specification of Smart Contract as specified in the design of Ethereum.[3][4][5]

- Self-documenting (Semantic precision[6]): PKC is a documentation-driven DevOps practice. It integrates human-readable documents using MediaWiki's hyperlinks and Special pages to represent the knowledge or state of the microservice at work. Self-documenting is achieved by relating semantic labeling technology to industry-standard Application Programming Interfaces (APIs) of Git and Docker/Kubernetes to reflect and report the status and historical trail of the PKC system at work. The real-time aspect of this software architecture is accomplished by managing timestamp information of the system using blockchain-based public timing services so that data content changes will be recorded by globally shared clocks.

The novelty of this key-value pair-centric approach to data asset management is achieving the simplest possible system representation without oversimplification[7]. It allows arbitrary functions, data content, and machine-processable causal relations to be represented uniformly in terms of key-value pairs. This key-value pair data primitive can also be used as a generic measuring metric to enable a sound, precise, and terminable framework for modeling complex web services.

Background and Introduction

This article shows that the complexity of web service development and maintenance operations can be significantly reduced by adopting a different data modeling mindset. The novelty of this approach lies in manipulating data assets as a concrete data type (key-value pair) while abstractly treating them as algebraic entities,[8][9] so that system complexity and data integrity concerns can always be reasoned about through a set of computable/decidable operations.[10][11] To deal with growth in functionality and data volume methodically, the accountability of system changes is delegated to three types of accounts: Externally Owned Accounts, Contract Accounts, and Project Accounts. Respectively, these three account types map accountability to human participants, behavioral specification, and project-specific operational anecdotal evidence. In other words, this data management framework represents system evolution possibilities in exact terms of human identities, version-controlled source code, project-specific execution traces, and nothing else, therefore using technical means to guarantee non-repudiation, transparency, and context awareness. Finally, this article is written using a prototypical data management tool, namely the PKC web service package, so that readers and potential editors who browse this article using the PKC web service will directly experience the algebraic properties and data-centric nature being realized in an operational tool that can derive data-driven improvements in a self-referential way.

Universality: an Axiomatic Assumption in Data Science

It is necessary to axiomatically assume that all information content can be approximately represented using a finite set of pre-defined symbols. The universe of symbols simply means the complete collection of all admissible symbols. The notion of logical universality[12], or rules that exhaustively apply to all admissible symbols in the symbol universe, is the intellectual foundation of logical proofs, therefore providing the scientific foundation of data integrity. Without this integrity assumption, data cannot have rigorous meaning.

In this article, we will treat key-value pairs as the universal component that serves as the unifying data and function representation device, so that we can reduce the learning curve and system maintenance complexity. Based on the axiomatic assumption, it is well known that programs built from a special kind of key-value pair, as in the Lambda calculus (commonly written as S-expressions), can represent any computational task.

Lambda Calculus: A recursive data structure that can represent all decision procedures

According to the universality assumption, all finite-length decision procedures can be represented as Lambda calculus[13][14] programs. We know this statement is true because Lambda calculus is known to be Turing complete,[15] meaning that it can model all possible computing/decision procedures. More technically, Turing completeness reveals the following insight:

All decision procedures can be recursively mapped onto a nested structure of switching (If-Then-Else) statements.

To test this idea, one may observe that Lambda calculus is a three-branch switching statement that represents three types (α, β, and η) of computational abstractions. We consider each type of abstraction computational, because the variable values and the interpretation results of expressions are determined dynamically.

| Admissible data types | Symbolic representation | Description |
|---|---|---|
| Variable (α-conversion) | x | A character or string representing a parameter or mathematical/logical value. |
| Substitution (β-reduction) | (λx.M)(value to be bound to x) | This expression specifies how a function is applied by substituting a value for the bound variable x in the lambda (λ) expression M. |
| Composition (η-reduction) | (M N) | Specifying the sequential composition of multiple lambda expressions such as M and N. |

As shown in the table above, all three admissible data types can be symbolically represented as textual expressions occasionally annotated by dedicated symbols, such as λ. In any case, all three data types are admissible forms of functional expressions. In compiler literature, this representational form of functions is called an S-expression, short for symbolic expression. It is well-established that an S-expression (often denoted in Backus-Naur form) can be used to represent any computing procedure and can also encode any digitized data content. To maximize representational efficiency, different kinds of data content should be encoded using different formats. Based on the universality assumption, all data content can be thought of as sequentially composed symbols.

Decision Procedure represented in a Switch Statement

To illustrate that all decision procedures can be represented by nothing but key-value pairs, we first start with the notion of control structure in terms of switching statements. A switching statement is simply a lookup table. Once given a certain value, it will switch to a defined procedure labeled with the matched value.

Using the built-in magic word[16] of MediaWiki, the code and the MediaWiki displayed result can be shown in the following table:

| Wiki Source Code | Rendered Result |

|---|---|

|

{{#switch: {{#expr: 3+2*1}} | 1 = one | 2 = two | 3|4|5 = any of 3–5 | 6 = six | 7 = {{uc:sEveN}} <!--uppercase--> | #default = other }} |

any of 3–5

|

Based on the example shown above, it should be evident that #switch, as a function, takes in the input expression {{#expr: 3+2*1}}, which evaluates to the numerical value 5. The #switch function then searches its key-value pairs for the matching key 5 (grouped with 3 and 4 in one branch) and returns the assigned value, any of 3–5.
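The lookup-table reading of the #switch example can be sketched in Python. This is only an illustrative analogue, not MediaWiki's implementation; the names `switch` and `CASES` are hypothetical.

```python
# Hypothetical Python analogue of the #switch example; not MediaWiki's code.
def switch(value, cases, default):
    """Return the value stored under the matching key, else the default."""
    return cases.get(value, default)


# Keys 3, 4 and 5 share one value, mirroring the grouped branch "3|4|5".
CASES = {
    1: "one",
    2: "two",
    3: "any of 3–5",
    4: "any of 3–5",
    5: "any of 3–5",
    6: "six",
    7: "sEveN".upper(),  # plays the role of {{uc:sEveN}}
}

# The input expression is evaluated first, then used as the lookup key.
result = switch(3 + 2 * 1, CASES, "other")
```

Here the whole control structure is a dictionary, so adding a branch amounts to adding one more key-value pair.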

The If-Then Control Structures as the minimal switch statement

Given the examples above, it should be obvious that the switching statement can produce a total of only 6 outputs, since the admissible cases have a total of 6 alternative branches (in this case, 3, 4, and 5 are considered to be one branch, and #default counts as a branch). In other words, #switch is a generalized function that allows programmers to define an arbitrary number of branches. In contrast, the #ifexpr function is a hard-wired branching statement with exactly two possible branches, where two branches are the minimal number required to be a switch statement. Since there are only two options, the relative sequential positions of the two branches become the implicit keys (position indices). For the function #ifexpr, the first branch is selected if the input expression evaluates to 1 (true), and the second branch is selected if it evaluates to 0 (false).

The first If example:

| Wiki Source Code | Rendered Result |

|---|---|

|

'''Wrapper wikicode or text''' {{#ifexpr: 3<5 | This expression is {{#expr: 0=0}} | This expression is evaluated to {{#expr: 9>9}} }} '''Wrapper wikicode'''. |

Wrapper wikicode or text This expression is 1 Wrapper wikicode. |

The second If example:

| Wiki Source Code | Rendered Result |

|---|---|

|

{{#ifexpr: 7=3 | {{#expr: 3+2=5}} RESULT | some text representing {{#expr: 1<1}} result }} |

some text representing 0 result |

The pair of #ifexpr examples shown above demonstrates the notion of selecting execution paths. The first example shows not only that the expression 3<5 is evaluated to be true, so that the first branch is selected, but also that the string is rewritten from its original form, This expression is {{#expr: 0=0}}, to This expression is 1. This demonstration reveals the basic behavior of the expression rewrite process, which is how Lambda calculus works. (For interested readers, examples of transcluding these code samples, and of determining which of the transcluded code is selected, can be found on the page Demo:CodeWrapper.)
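The two #ifexpr examples can be mirrored by a two-entry lookup table in Python. This is a minimal sketch under the assumption that truth values map to the keys 1 and 0; the function name `if_expr` is hypothetical, and unlike a wiki parser, Python evaluates both branch strings before selecting one.

```python
# Hypothetical analogue of #ifexpr: a switch with exactly two branches.
def if_expr(condition, then_branch, else_branch):
    """Select a branch by position: key 1 for true, key 0 for false."""
    branches = {1: then_branch, 0: else_branch}
    return branches[1 if condition else 0]


# int(...) turns Python booleans into the 1/0 values that #expr would print.
first = if_expr(
    3 < 5,
    f"This expression is {int(0 == 0)}",
    f"This expression is evaluated to {int(9 > 9)}",
)
second = if_expr(
    7 == 3,
    f"{int(3 + 2 == 5)} RESULT",
    f"some text representing {int(1 < 1)} result",
)
```

The nested `int(...)` calls stand in for the inner {{#expr: ...}} rewrites, which are resolved before the selected string is returned.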

Lambda Calculus as a three branch recursive switching statement

Given the general case of switching (#switch) and the special case of switching (#ifexpr), it can now be revealed that Lambda calculus is nothing but a three-branch switching structure:

<λexp> ::= <var> | λ <var> . <λexp> | ( <λexp> <λexp> )

Seeing the Backus-Naur form implementation of Lambda calculus, it should be obvious that this Turing-complete language is completely implemented in key-value pairs. One may reflect on the argument presented so far:

Key-value pair is the foundational building block for constructing decision procedures (computational processes).
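To make the three-branch reading of the grammar concrete, the sketch below encodes lambda terms as tagged tuples and dispatches on the tag through a dictionary. The tuple encoding and the names `free_vars`, `identity`, and `applied` are assumptions chosen for illustration; the example computes free variables rather than performing full reduction.

```python
# Hypothetical encoding of <λexp>: one tagged tuple per grammar branch.
#   ("var", name)            for <var>
#   ("lam", name, body)      for λ <var> . <λexp>
#   ("app", function, arg)   for ( <λexp> <λexp> )
def free_vars(term):
    """Return the set of free variable names, dispatching on the term's tag."""
    handlers = {
        "var": lambda t: {t[1]},
        "lam": lambda t: free_vars(t[2]) - {t[1]},
        "app": lambda t: free_vars(t[1]) | free_vars(t[2]),
    }
    # The tag is the key; the handler is the value selected by the switch.
    return handlers[term[0]](term)


identity = ("lam", "x", ("var", "x"))      # λx.x
applied = ("app", identity, ("var", "y"))  # ((λx.x) y)
```

Calling `free_vars(applied)` walks the nested structure recursively, selecting one of exactly three handlers at every step.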

Example: Wiki code as annotated Lambda expression

Lambda calculus expression: <λexp> can be encoded in MediaWiki as {{#expr: computable expression}}. In this wiki's syntactical structure, #expr: is equivalent to the marker:λ in Lambda calculus. In other words, the entire wiki page that you are reading and editing is effectively an annotated Lambda calculus expression. Whenever a segment of the text shows the pattern of {{#expr: computable expression}}, the string rewrite system on the web server will start interpreting and following the switching structure denoted by the changeable content of <var> and <λexp>. The possible values of these three types of <λexp> can be as large as one's database can hold. This is basically where database technologies and PKC play a role.

Key-value pairs for composing web-based computational services

Knowing that all decision procedures are composed of switching statements, one may apply this simple principle to the composition of web services. The following table should help relate concepts developed in Lambda calculus to web services:

| Admissible data types | Symbolic representation | Description |
|---|---|---|
| Variable (α-conversion) | x | A web page or a data artifact that can be observed and used directly by a web user. |
| Substitution (β-reduction) | (λx.M)(value to be bound to x) | A template or executable function that can be reused and filled in with a defined range of values or data feeds. |
| Composition (η-reduction) | (M N) | The sequential/structural arrangements of known computational resources. |

Managing Functions as Catalogs of Names in Adequate Cycle Times

When mapping structural information of an arbitrary system to a set, a morphism (a generalized kind of function) that conducts this mapping is called a representable functor. This mapping can also be represented using an S-expression, and syntactically, it is denoted as a pair of data elements called a key-value pair. In the web-based environment, every hyperlink is a key-value pair, where the key is a Uniform Resource Locator (URL) string and the value is the page or data element referenced by the URL. A collection of key-value pairs can be considered as a dictionary of hashtables, where keys are unique values of the hashes. Once everything is represented as a URL, it will have a shelf life, meaning that its validity will change over time. Managing every URL in terms of its time-based properties will be the main challenge in maintaining the integrity of namespaces.
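A catalog of hyperlinks with a shelf life can be sketched as a dictionary whose values carry their own validity window. The `Entry` class, the `lookup` function, the example URL, and the 30-day shelf life are all hypothetical choices made for this illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Entry:
    """Value side of a hyperlink, plus the time-based properties of the link."""
    value: str
    checked_at: datetime
    shelf_life: timedelta

    def is_valid(self, now):
        return now - self.checked_at <= self.shelf_life


def lookup(catalog, url, now):
    """Return the referenced value only while the URL is within its shelf life."""
    entry = catalog.get(url)
    if entry is None or not entry.is_valid(now):
        return None
    return entry.value


# Hypothetical catalog: the URL is the key, the Entry is the value.
catalog = {
    "https://example.org/page/PKC": Entry(
        value="PKC overview page",
        checked_at=datetime(2022, 12, 1, tzinfo=timezone.utc),
        shelf_life=timedelta(days=30),
    ),
}
```

The caller supplies `now` explicitly, which keeps the validity check reproducible and leaves room for a shared clock source.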

Security through Accountability

Systems are composed of imperfect subsystems. Therefore, it is impossible to guarantee the absolute security and integrity of a system. The integrity and security of a system can only be upheld through traceable accountability. By associating every data change with an appropriate account, every system will have accounts to which accountability can be assigned.

System Observability with automated testing

To involve the largest number of users with the system, the strategy is to reveal the data through the most egalitarian kind of user interface. In this case, we choose the web browser as the common interface for humans and machines. It is possible to use tools such as Selenium or Quant-UX to automatically act as users to test the overall system. This will close the loop by letting data drive the testing behavior and by collecting the results produced by the data service system.

Data Integrity Concerns and Accountability (TBD)

This section will detail the implementation of PKC and its software engineering-related concerns.

Representational Closure

| Technical Term | Abstraction types | Symbolic representation | Description |
|---|---|---|---|
| α-conversion | Variable | Naming abstraction | A collection of symbols (names) that act as unique identifiers. |
| β-reduction | Substitution rule | Function evaluations | A template or executable function that can be reused in multiple contexts. |
| η-composition | Sequential composition | Function composition | The sequential/structural arrangements of known representable data. |

Extensibility, Scalability, and Learnability

Based on results presented in the Algebra of Systems, this computational framework specifies an algebraically-formulated accounting system for transacting data assets on the web. Operationally, this article defines the data capture and data verification procedure in terms of the above-mentioned data asset classes so that it can leverage the mathematical rigor to reason about data integrity. Moreover, this article prescribes an implementation roadmap to construct an open source and self-owned cloud computing (network-based data processing) service utilizing a decentralized security system, so that small and large organizations can utilize the same data processing infrastructure to conduct business activities. This will significantly reduce the cost and accelerate business transaction cycles, therefore enabling more people to utilize the technical potential of the supply network of data, products, and services on the Internet. Most importantly, it will enable a much larger crowd to utilize data processing technologies, such as cloud computing services, without having to become a full-stack software developer, but by browsing through catalogs of PKC-packaged publicly tested data assets.

Decision-making agents represented as Accounts

An account is a type of data structure that defines conditional rights based on ownership. This can be accomplished technically using cryptographically guaranteed algorithms. Inspired by Ethereum, for the right to assign ownership to resources, only two kinds of accounts are possible:

- Externally Owned Account: This class of accounts is controlled by agents or agencies that must authenticate their identity, and they can exercise their rights via an access control list.

- Programmable Account (a.k.a. Contract Account): This class of accounts is controlled by published source code, which is executed on behalf of the Externally Owned Account that controls the code base and is implicitly trusted by all participants.

Broadest Possible User Base

This framework should provide intuitive user interfaces for entry-level users through popularly-available web browser-based interfaces via features offered freely on the Internet, and create an open source turn-key solution that allows almost everyone on the web to run a self-sovereign cloud computing service. This software artifact presents many business opportunities and inspires many new technologies; however, methods and tools to ensure system integrity have not yet caught up with these changes. Complex software applications and business processes that have been serving a large portion of society are searching for systematic ways to migrate to modern technical infrastructures.

Deployment and Interoperability of Accountable Data

According to Rambaud and Pérez[17][18], an algebraically-defined accounting (data capture and verification) practice may systematically automate the decision procedures for the following activities:

- How to classify the data collected and route it to the relevant data processing workflows.

- Whether a given data set is admissible. This is judged in terms of its data formats and legal value ranges.

- Whether a transaction process is allowable. This includes whether a given transaction is feasible under the relevant operational or business logic.
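The three decision procedures listed above can be sketched as three small functions. This is an illustrative sketch only: the `RULES` and `WORKFLOWS` tables, the field names, and the overdraft rule are assumptions made for the example and are not taken from Rambaud and Pérez.

```python
from typing import Optional

# Hypothetical admissibility rules: field name -> (expected type, min, max).
RULES = {
    "amount": (float, 0.0, 1_000_000.0),
    "quantity": (int, 1, 10_000),
}

# Hypothetical routing table: record kind -> workflow name.
WORKFLOWS = {"invoice": "accounts_receivable", "payment": "cash_ledger"}


def classify(record: dict) -> Optional[str]:
    """Decide which workflow should receive the record, or None if unknown."""
    return WORKFLOWS.get(record.get("kind"))


def is_admissible(record: dict) -> bool:
    """Check data format (type) and legal value range for every governed field."""
    for name, (expected_type, low, high) in RULES.items():
        if name not in record:
            continue
        value = record[name]
        if not isinstance(value, expected_type) or not (low <= value <= high):
            return False
    return True


def is_allowable(balance: float, record: dict) -> bool:
    """A payment is feasible only if it does not overdraw the balance."""
    if record.get("kind") == "payment":
        return balance - record["amount"] >= 0
    return True
```

Keeping the three checks separate mirrors the list: a record can be well classified yet inadmissible, or admissible yet not allowable as a transaction.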

Deployment Process

A pragmatic way of deploying accountable data assets is to leverage three things: the immutability of past history, the unpredictability of future events, and the duality, or even three-layered aspects, of computation (the connections between Proof, Program Execution, and Grammar). Together they make it possible to verify and validate the correctness of data content against a common blockchain, which assigns a single ordering sequence to all data elements. The plan is to use a generalized workflow to iteratively refine and optimize the content structure of data assets in a networked environment that assigns sociophysical meaning to data.

Controlling the Logic of Deployment using Automated Tests

In traditional testing practices, the controlling gate is a senior developer's reputation, or a central administration's authority, to approve test results before a piece of code is deployed to the public. In this proposed framework, by contrast, data assets, source code, and test cases are all treated as named data assets that supporting parties nominate or pledge under their own account identities. Any account, whether it is an Externally Owned Account or a Programmable Account, can sign off on the release of data content to a particular stage of qualification based on a Ricardian Contract. This is a direct application of the well-known Smart Contract infrastructure to control a software production workflow, or a content release workflow, at a much larger scale. The total number of participants needed to release any given piece of data can be controlled by the Smart Contract, which could be bound to a well-defined versioning system to make the Smart Contract a Ricardian Contract.
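A sign-off gate of this kind can be sketched in a few lines. The sketch below is an assumption-laden illustration rather than a Smart Contract: the `ReleaseContract` class, its fields, and the threshold rule are hypothetical, and a real deployment would verify cryptographic signatures on-chain instead of trusting plain address strings.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseContract:
    """Hypothetical sign-off gate bound to one version of one data asset."""
    asset_name: str
    version: str          # e.g. a version-control commit identifier
    stage: str            # qualification stage being approved
    eligible: frozenset   # account addresses allowed to sign
    required: int         # number of distinct sign-offs needed
    signed: set = field(default_factory=set)

    def sign_off(self, account_address: str) -> bool:
        """Record a pledge; reject accounts outside the eligible set."""
        if account_address not in self.eligible:
            return False
        self.signed.add(account_address)
        return True

    def is_released(self) -> bool:
        # Distinct accounts are counted, so signing twice adds nothing.
        return len(self.signed) >= self.required
```

Binding the contract to a `version` string is what ties the approval to one specific, inspectable state of the asset, which is the role the text assigns to the versioning system.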

Lambda Calculus and Curry-Howard-Lambek Correspondence

The second layer of this framework integrates test cases and test case execution using the well-known mathematical observation called the Curry-Howard-Lambek Correspondence. Under this view, pieces of data are submitted to predefined execution procedures, which are themselves timestamped pieces of data. Each procedure transforms its input data content into output data content that signifies its judgment on the input. Recording the execution trail of input data together with the results of the test procedures could constitute a form of automated verification or validation of the system.
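The execution trail described above can be sketched as an append-only list of records. This is a minimal sketch under assumptions: the helper names `digest` and `run_and_record` are hypothetical, and the local clock stands in for the blockchain-derived timestamp that the framework envisions.

```python
import hashlib
import json
import time
from typing import Callable


def digest(data) -> str:
    """Stable SHA-256 digest of JSON-serializable data."""
    payload = json.dumps(data, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_and_record(trail: list, name: str, procedure: Callable, data) -> bool:
    """Run one test procedure and append its judgment to the trail."""
    verdict = bool(procedure(data))
    trail.append({
        "procedure": name,
        "input_digest": digest(data),
        "verdict": verdict,
        # Local clock used here; an assumption standing in for a shared time source.
        "timestamp": time.time(),
    })
    return verdict
```

Each entry pairs an input digest with a procedure name and a verdict, so a later reader can replay the same procedure on the same input and compare results.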

Hermeneutical Circle and Data Configurations

Think of software or a data content product as a configuration management exercise. The notion of Hermeneutics can then be applied here. The term refers to the theory and methodology of text interpretation, originally the interpretation of biblical texts, wisdom literature, and philosophical texts, but it can also be applied to data interpretation. The following diagram is extracted from The Life and Works of Luca Pacioli:[19]

[Diagram: the Hermeneutical Circle of Prefiguration, Configuration, and Refiguration]

In the Hermeneutical diagram:

- Configuration refers to the data content to be exposed to the public.

- Refiguration refers to data content that is being challenged and modified.

- Prefiguration refers to data content that is being proposed and will be processed through existing test procedures.

These three distinctive phases can be managed using configuration management tools, such as Git or Fossil, as a version control database to keep the cycles of Hermeneutic evolutions moving forward in a controlled manner.
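The three phases can be modeled as a small state machine before mapping them onto branches or tags in a version control tool. The sketch below is illustrative only: the `Phase` enum, the `advance` function, and in particular the order of the cycle are assumptions, since the text names the phases but does not spell out the transitions.

```python
from enum import Enum


class Phase(Enum):
    PREFIGURATION = "proposed; awaiting existing test procedures"
    CONFIGURATION = "exposed to the public"
    REFIGURATION = "challenged and being modified"


# Assumed cycle order; the source names the phases but not the transitions.
NEXT_PHASE = {
    Phase.PREFIGURATION: Phase.CONFIGURATION,
    Phase.CONFIGURATION: Phase.REFIGURATION,
    Phase.REFIGURATION: Phase.PREFIGURATION,
}


def advance(phase: Phase, tests_passed: bool = True) -> Phase:
    """Move to the next phase; a proposal that fails its tests stays proposed."""
    if phase is Phase.PREFIGURATION and not tests_passed:
        return phase
    return NEXT_PHASE[phase]
```

Under this reading, each full turn of the cycle would correspond to at least one new commit in the version control database.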

Proof, Program Execution, and Grammar

Following the assumptions in the Curry-Howard-Lambek Correspondence, the notions of Proof, Program Execution, and Grammar are three aspects of a unifying system. These aspects of data content knowledge would only be "proven" if they are represented in their respective domains. Conveniently, these three data structures can be explicitly defined and given appropriate abstractions within their domains. The work of managing software products can follow a compact universal data classification scheme and keep using the tools and methods provided by the three domains to prove, execute, and define the structural nature of every data element.

Physical, Social and Operationalized Meaning of Data

Once we have a generalized theoretical framework to manage abstract data, we need a concrete platform to assign physical meaning to data. In this case, we want to assign timestamps derived from a common blockchain to data packages. This will provide a consistent mechanism to note the latest modification time. This order-asserting property will help distinguish the structure of data dependency based on the temporal ordering, as well as the source of changes in terms of account addresses (Externally Owned Accounts or Programmable Accounts) mentioned before. In other words, this framework assigns physical meaning to data through timestamps, and social meaning to data through account addresses. Therefore, data assets and data content will naturally be associated with adequate accountability.
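The pairing of a timestamp with an account address can be sketched as a small record type. This is a sketch under assumptions: `StampedPackage` and the two helper functions are hypothetical names, and a block number is used as a stand-in for the blockchain-derived timestamp.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StampedPackage:
    name: str
    block_number: int     # position in the common blockchain (physical meaning)
    account_address: str  # who made the change (social meaning)


def latest_by_name(packages):
    """Return the most recent package per name, ordered by block number."""
    latest = {}
    for pkg in sorted(packages, key=lambda p: p.block_number):
        latest[pkg.name] = pkg   # later blocks overwrite earlier ones
    return latest


def changes_by_account(packages, account_address):
    """List the names an account modified, in blockchain order."""
    ordered = sorted(packages, key=lambda p: p.block_number)
    return [p.name for p in ordered if p.account_address == account_address]
```

Because every package carries both fields, the same collection answers "what is the latest version?" and "who changed what, and in which order?" without any extra bookkeeping.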

Operationalized Meaning of Data

Data can be manipulated by a large number of heterogeneous computers operating in various locations and configurations. This can have significant implications for performance and for data accuracy and consistency. From a PKC point of view, individual consumers of data content should not be burdened with sophisticated technologies or massive energy consumption and data storage requirements. Data compression and refreshing, with adequate attention to economic cost, should therefore be a built-in function hidden from everyday usage. With the arrival of Docker-like container virtualization technologies, it became possible to operationalize data manipulation across a large number of computing architectures with consistent configuration. This minimizes discrepancies in data manipulation, on the assumption that virtualized computers will not distort data content or functional correctness. Moreover, a mechanism such as Fossil, which uses a relational data model (SQLite as a data repository) to manage files and data version history, can be a universal model for managing data in a recursively distributed manner.

Interoperability of PKC

Given that all data content can be associated with physical, social, and operationalized meanings in a rigorous way, grounded in lambda calculus, it is conceivable that the composability of data can be attained in a computational framework that associates humans, human agencies, and technically verified procedures and data content. This would be a useful framework for reducing uncertainty in data management, and therefore for enhancing the exchangeability of data and source code. More importantly, every PKC can be associated with these data management tools and infrastructures. Because each PKC instance qualifies or signifies a specific version of the software and/or knowledge content, information consistency can be reached with minimal third-party intervention. Effectively, PKC can be used as a domain-neutral data management operating system that keeps data content consistent without leaking information beyond the system-prescribed perimeters.

Open Format over Open Source

Contrary to common belief, Open Format is more important than Open Source, since source code can be a major burden to parse, understand, and test for validity. Formats of information, however, are by definition explicit, and need to be open enough to be easily understood and to encourage interchange. Therefore, a key-value pair-based format could be a foundational building block to support the Open Format idea. This requires thorough and rigorous adoption of the OpenAPI Specification, as well as JSON/YAML key-value pair-based data formats and declarative syntax for specifying data assets across the File, Page, and Service data domains.
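A declarative, key-value description of assets across the three data domains might look like the following. This is a hedged illustration: the keys `domain`, `name`, and `format`, the sample values, and the `validate` helper are all hypothetical and are not part of any published PKC or OpenAPI schema.

```python
import json

# Hypothetical declarative descriptions; every field name is illustrative.
ASSETS_JSON = """
[
  {"domain": "File",    "name": "logo.png",   "format": "image/png"},
  {"domain": "Page",    "name": "Main_Page",  "format": "text/x-wiki"},
  {"domain": "Service", "name": "search-api", "format": "application/json"}
]
"""

REQUIRED_KEYS = {"domain", "name", "format"}
DOMAINS = {"File", "Page", "Service"}


def validate(asset: dict) -> list:
    """Return a list of problems; an empty list means the asset is well-formed."""
    problems = [f"missing key: {k}" for k in sorted(REQUIRED_KEYS - set(asset))]
    if asset.get("domain") not in DOMAINS:
        problems.append(f"unknown domain: {asset.get('domain')}")
    return problems


assets = json.loads(ASSETS_JSON)
by_domain = {a["domain"]: a["name"] for a in assets}
```

The point of the sketch is that a reader needs only the format, not the source code of any producing program, to parse the description and check whether it is valid.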

The three objectives at G20 2022

To enable inter-organizational workflows or data exchanges, according to Luhut Panjaitan, the Coordinating Minister for Maritime and Investment Affairs of Indonesia, the host nation of the G20 in 2022, the Science of Governance should be spread through self-administered data as prescribed in the following steps:

- The creation of a data storage and manipulation instrument (PKC) to enable a personalized and scalable containment of data assets.

- The creation of publicly available training programs and publishable curriculum to promote the understanding of governance through data.

- The invitation of, and collaboration with, global industry standards bodies to set adequate and fair data standards for all national participants.

Only with non-proprietary data asset management instrumentation, open and public educational programs on the value of data, and industry-wide data exchange protocols that are open to public examination can one really anticipate a continuous flow of data assets with minimal friction. Otherwise, the world would continue to see chaos due not only to friction, but to information asymmetry as well.

Conclusion

This article proposes a system composition/decomposition strategy with an algebraic programming model. It also presents a sample implementation, namely PKC, as a self-sufficient building block of an inter-organizational data transaction system, and borrows the concept of "accounting practice" and its formal mathematical framework to ensure accountability and data consistency. The proposed framework differs from existing blockchains or Web3 systems in the following ways:

- It is a hyperlinked data asset management framework that uses key-value pairs to link content data, source code, and executable binary data images in one consistently abstracted workflow. The notion of key-value pairs is also the building block of Lambda calculus, which provides a model of functional composition and can be applied to represent workflows. This workflow model allows anyone to reuse the content knowledge, source code, and operational experience of the PKC community. It allows organizations of any size to operate their own data asset management infrastructure using a chosen branch of this open-source data asset management framework.

- The framework uses a data-driven (declarative) programming model that integrates content, executable functions, and networked data services as nothing but key-value pairs, so that it will simply grow and refine its own logical integrity as more key-value pairs are being accumulated. In other words, PKC is a scale-free and domain-neutral learning system that will naturally evolve its own structure and content as these key-value pairs are being added to its data asset repository. Both good and bad results can be transparently reused by all other parties.

- It uses a web browser-oriented data abstraction that presents all data assets in terms of a page abstraction, so a universal namespace and data presentation mechanism covers all usage scenarios while remaining compatible with other universal data abstractions, such as file and service abstractions. This combination of page, file, and service abstractions is defined and programmed into the web using the key-value pair programming model, and therefore offers the maximum reach in terms of participants and data consumption parties.

TBD

Since the popularization of the World Wide Web around 1995, world affairs have been transformed by ever-faster electronic data transaction activities. This data-driven phenomenon created an unprecedented global supply network that can be considered a single interconnected web of data transaction activities. Up to the year 2022[20], this data-driven supply network has favored organizations or people who have deep pockets and access to more advanced Information Technologies. The competitive edge conferred by wealth and technology literacy has induced many unfair practices and even unethical and/or illegal transaction activities at a global scale. To resolve this issue, this article presents the Personal Knowledge Container (PKC) as a self-administered cloud computing service that reduces unfair competitive edges and lowers the cost of system participation and operation, which is necessary to address many fundamental issues caused by information asymmetry.

Recent developments in blockchain and Decentralized Identifier technologies, coupled with web-based applications and 4G/5G-connected devices, have created a technical infrastructure that could significantly reduce the degree of unfairness and information asymmetry in the global marketplace. Anyone with access to an Internet-connected web-browsing device has been able not just to participate in the global supply network, but also to learn and operate their own business with minimal entry barriers. To continuously introduce late-breaking Information Technologies to the broadest possible range of users, the world needs to present a user experience through widely available web browsers that can present a wide range of data formats, including natural language annotations and timely workflow instructions. Most importantly, the world needs a "fair" data security model that protects the interests of all participants in a transparent[21] way.

Why and how this framework differs from existing approaches

Existing web application frameworks are often developed and operated by highly skilled software development and operations teams that serve a specific set of profit-seeking objectives. Each instance of a web service has a highly localized and protected set of operational data. This operational data and software configuration knowledge are privately owned assets that are usually protected and not shared with the public. In contrast, PKC differs from existing data transaction systems, often known as Infrastructure as Code (IaC), in the following way:

Most people simply cannot believe it can be this simple

Key-value pairs, or hyperlinked data content, are the simplest yet universal data type that connects our world and minds. When this universal instrument is made explicit and integrated with self-documented technical arguments to continuously explore and explain the opportunities for improvement, this data management framework and its derived data management tools, such as PKC, can continuously improve their system correctness while accelerating all activities supported by PKC.

Simplicity enables massive and decentralized/distributed adoption, and generates trustworthy data