Backup and Restore


Latest revision as of 11:14, 13 June 2022

Introduction

For PKC-related data backup and restore, see Backup and Restore Loop.

To ensure that this MediaWiki's content will not be lost[1], we created a set of scripts and placed them in the extensions/BackupAndRestore directory under $wgResourceBase.

The main challenge is to ensure both textual data and binary files are backed up and restored. There are four distinct steps:

- Official Database Backup Tools

- Official Media File Backup Tools

- Restoring Binary Files

- Restoring SQL Data

It is also necessary to understand MediaWiki's notion of a file backend[2], as used, for example, by the CopyFileBackend.php maintenance script.

For specific data loading scripts, please see ImportTextFiles.php.

Database Backup

For textual data, the fastest way to back up is to use mysqldump. More detailed instructions can be found in the MediaWiki manual on backing up a wiki[3].

To back up all uploaded files, such as images, PDF files, and other binary files, refer to this Stack Overflow answer[4].

In the PKC docker-compose configuration, the backup file should be written to /var/lib/mysql so that it can be conveniently transferred from the host machine of the Docker runtime.

Example command to run in a Linux/UNIX shell:

mysqldump -h hostname -u userid -p --default-character-set=whatever dbname > backup.sql

To run this command in PKC's Docker deployment, first open a shell inside the container, for example:

docker exec -it pkc-mediawiki-1 /bin/bash

(Depending on the deployment, the container name pkc-mediawiki-1 may be replaced by xlp_mediawiki.)
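Alternatively, the dump can be run without an interactive shell, writing the file directly onto the Docker host. The sketch below assumes that the named container has mysqldump installed and can reach the database, and that the password is supplied in a DB_PASSWORD environment variable; both the function name dump_from_container and DB_PASSWORD are hypothetical. The -t flag is deliberately omitted, since output that passes through a pseudo-terminal can have its line endings altered before it reaches the redirected file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run mysqldump inside a container and save the output
# on the Docker host. No -t flag: the dump must not pass through a terminal.
# DB_PASSWORD is an assumed environment variable holding the DB password.
dump_from_container() {
  local container="$1" db_user="$2" db_name="$3" out="$4"
  if [ -z "${DB_PASSWORD:-}" ]; then
    echo "set DB_PASSWORD to the database password" >&2
    return 1
  fi
  # As in the command above, there is no space between -p and the password.
  docker exec "$container" mysqldump -u "$db_user" -p"$DB_PASSWORD" "$db_name" > "$out" || {
    rm -f "$out"   # do not leave a partial dump behind
    return 1
  }
}
```

For example, `DB_PASSWORD=... dump_from_container pkc-mediawiki-1 wikiuser my_wiki backup.sql` would leave backup.sql in the current directory on the host. Note that, as with the original command, a password given on the command line may be visible to other users in the process list.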

When running this command on the database host itself, the -h hostname option can be omitted; the remaining parameters are explained below:

mysqldump -u wikiuser -pPASSWORD_FOR_YOUR_DATABASE my_wiki > backup.sql

(Note that you should NOT leave a space between -p and the password.)

Substitute hostname, userid, whatever, and dbname as appropriate. All four can be found in your LocalSettings.php (LSP) file: hostname is set by $wgDBserver (by default localhost), userid by $wgDBuser, whatever is listed in $wgDBTableOptions after DEFAULT CHARSET=, and dbname is set by $wgDBname. If whatever is not specified, mysqldump will likely use the default of utf8, or latin1 with older versions of MySQL. After you run the command, mysqldump prompts for the database password (set by $wgDBpassword in LSP).
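These lookups can also be scripted. The sketch below is a minimal example, assuming LocalSettings.php uses the usual one-assignment-per-line form such as `$wgDBname = "my_wiki";`; the helper names lsp_get and lsp_charset are hypothetical and not part of MediaWiki.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: read database settings from LocalSettings.php.
# Assumes one assignment per line, e.g.  $wgDBname = "my_wiki";

# Print the quoted value assigned to $<var> (first match only).
lsp_get() {
  local var="$1" file="${2:-/var/www/html/LocalSettings.php}"
  sed -n -E "s/^[[:space:]]*\\\$${var}[[:space:]]*=[[:space:]]*[\"']([^\"']*)[\"'][[:space:]]*;.*/\\1/p" "$file" | head -n 1
}

# Print the character set listed after DEFAULT CHARSET= in $wgDBTableOptions.
lsp_charset() {
  lsp_get wgDBTableOptions "$1" | sed -n -E 's/.*DEFAULT CHARSET=([A-Za-z0-9_]+).*/\1/p'
}
```

With a line `$wgDBname = "my_wiki";` in the file, `lsp_get wgDBname` prints my_wiki, so the values can be passed to mysqldump as `-u "$(lsp_get wgDBuser)"` and so on. Settings built dynamically in PHP (for example from environment variables) will not be found by this simple pattern match.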

The following command compresses the output as it is being dumped:

mysqldump -h hostname -u userid -p dbname | gzip > backup.sql.gz
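One caveat with this pipe: by default the shell reports only gzip's exit status, so a failed mysqldump can still leave a small, valid-looking .gz file. The sketch below enables pipefail for the pipeline and adds a timestamp to the file name; the function names backup_name and dump_gz and the naming scheme are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the naming scheme is an illustration only.

# Print a timestamped file name such as my_wiki_20220613_111400.sql.gz
backup_name() {
  printf '%s_%s.sql.gz\n' "$1" "$(date +%Y%m%d_%H%M%S)"
}

# Dump one database through gzip. pipefail makes the pipeline fail when
# mysqldump fails, not only when gzip fails; a partial file is removed.
dump_gz() {
  local host="$1" user="$2" db="$3" out
  out="$(backup_name "$db")"
  if ( set -o pipefail; mysqldump -h "$host" -u "$user" -p "$db" | gzip > "$out" ); then
    echo "$out"
  else
    rm -f "$out"
    return 1
  fi
}
```

`dump_gz hostname userid dbname` prompts for the password exactly as the plain command does and prints the name of the file it wrote.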

Dump XML file

One may use dumpBackup.php to back up textual content into a single XML file. Make sure that the following command is run from the /var/www/html directory.

php maintenance/dumpBackup.php --full --quiet > ./images/yourFileName.xml
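A truncated XML dump can go unnoticed until restore time. As a quick sanity check, a complete dumpBackup.php export should end with the closing </mediawiki> tag of the MediaWiki XML export format. The sketch below checks for that tag and counts <page> elements; the function name check_xml_dump is hypothetical, and the check is a heuristic rather than a full schema validation.

```shell
#!/usr/bin/env bash
# Hypothetical sanity check for an XML dump produced by dumpBackup.php.
# Heuristic only: it does not validate the XML against the export schema.
check_xml_dump() {
  local file="$1" last pages
  if [ ! -s "$file" ]; then
    echo "empty or missing: $file" >&2
    return 1
  fi
  # A complete export ends with the root element's closing tag.
  last=$(tail -n 5 "$file")
  case "$last" in
    *'</mediawiki>'*) ;;
    *) echo "no closing </mediawiki> tag, dump may be truncated: $file" >&2
       return 1 ;;
  esac
  # Count lines that open a <page> element, as a rough size indicator.
  pages=$(grep -c '<page>' "$file" || true)
  echo "$pages"
}
```

For example, `check_xml_dump ./images/yourFileName.xml` prints the page count, or returns non-zero if the file looks incomplete.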

Media File Backup

Before running the PHP maintenance script dumpUploads.php, you must first create a temporary working directory:

mkdir /tmp/MediaFiles

It is common for some files not to be dumped, because of errors caused by special characters (such as quotes) in file names. This will be resolved in the future.

You must first change to the proper directory; in a standard PKC configuration, launch the following command from /var/www/html:

php maintenance/dumpUploads.php | sed -e '/\.\.\//d' -e "/'/d" | xargs --verbose cp -t /tmp/MediaFiles

Note that the first sed expression removes paths containing ../, and the second removes file names containing a ' character. After copying all the files to the /tmp/MediaFiles directory, make sure to check whether any files are missing.

Then, compress the directory into a zip archive:
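Because xargs splits its input on blanks, file names containing spaces can also be lost in the pipeline above. The sketch below is an alternative, assuming dumpUploads.php prints one path per line: it reads the paths line by line, skips the same paths the two sed expressions drop, copies each remaining file, and records every skipped or failed path in a report so that missing files can be checked afterwards. The function name copy_uploads and the report file are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical alternative to the sed | xargs pipeline.
# Reads one path per line on stdin, skips paths containing "../" or "'"
# (like the sed filters), copies the rest into DEST, and appends every
# skipped or failed path to REPORT. Prints the number of files copied.
copy_uploads() {
  local dest="$1" report="$2" path copied=0
  mkdir -p "$dest"
  : > "$report"
  while IFS= read -r path; do
    case "$path" in
      *../*|*"'"*) echo "skipped: $path" >> "$report"; continue ;;
    esac
    if cp -- "$path" "$dest/"; then
      copied=$((copied + 1))
    else
      echo "failed: $path" >> "$report"
    fi
  done
  echo "$copied"
}
```

For example, `php maintenance/dumpUploads.php | copy_uploads /tmp/MediaFiles /tmp/MediaFiles.report` copies the files and leaves a list of every file that did not make it into /tmp/MediaFiles. Like `cp -t`, it copies all files into one flat directory, so two uploads with the same base name would overwrite each other.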

zip -r ~/UploadedFiles_date_time.zip /tmp/MediaFiles

Remember to remove the temporary directory and its files:

rm -r /tmp/MediaFiles

It is worth learning more about the xargs and sed Unix commands.

Restoring Binary Files

To load binary files into MediaWiki, use the importImages.php maintenance script in the maintenance directory. The command must be launched inside the container that runs the MediaWiki instance.

Load the images from the UploadedFiles directory. In most cases, $ResourceBasePath can be replaced by /var/www/html.

cd $ResourceBasePath
php $ResourceBasePath/maintenance/importImages.php $ResourceBasePath/images/UploadedFiles/

After all files are uploaded, run the rebuildImages.php maintenance script on the server that hosts the MediaWiki service:

php $ResourceBasePath/maintenance/rebuildImages.php

For more information, please refer to MediaWiki's documentation, Manual:rebuildImages.php.

Restoring SQL Data

The following command should be run inside the container that hosts the MariaDB/MySQL service (entered through docker exec -it or kubectl exec -it):

mysql -u $DATABASE_USER -p $DATABASE_NAME < BACKUP_DATA.sql

If the file is very large, it may have been compressed into .gz form. In that case, use a pipe to decompress it and send it directly to the mysql program for loading (a .tar.gz archive must be extracted with tar instead, since gunzip alone yields a tar stream rather than plain SQL):

gunzip -c BACKUP_DATA.sql.gz | mysql -u $DATABASE_USER -p $DATABASE_NAME

Load XML file

One may use importDump.php to restore textual content from a single XML file. Make sure that the following command is run from the /var/www/html directory.

php maintenance/importDump.php < yourFileName.xml

Industry Solutions

It would be useful to see how other technology providers solve backup and restore problems. For instance, Kasten may be a relevant solution.

Backup and Restore Loop

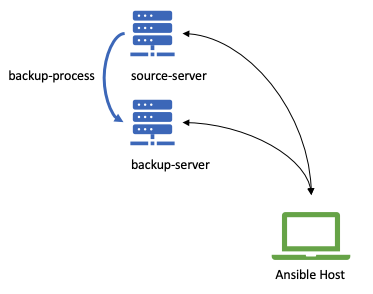

The PKC Automatic Backup and Restore Process is a series of commands executed on a backup-source server and a backup-target server. The goal of the loop is to ensure that there is always backup data that can easily be restored onto the target server. The schematic diagram above shows how the process is executed.

Introduction

Configuration is done by an Ansible script that is executed from an Ansible host. The deployment process is outlined below:

- Get the source code: clone it from GitHub to your local machine. The source code contains all the Ansible scripts needed to execute the deployment process.

- Adjust the configuration: adjust the configuration on your local machine to define the backup-and-restore loop.

- Execute Backup Process: execute the backup installation.

- Execute Restore Process: execute the restore installation.

Steps to deploy

Below are the steps to perform the Backup and Restore Loop from the machine that runs Ansible. You need to install Ansible on your local machine before you can execute the Ansible scripts; for a comprehensive guide to installing Ansible, see Ansible Installation.

Get the source code

Download the source code from GitHub:

git clone https://github.com/xlp0/PKC

Adjust the configuration

The PKC source code contains the directories shown below. Two files need to be adjusted before you can start performing the Backup and Restore Loop:

- host-restore in ./resource/config

- cs-restore-remote.yml in ./resource/ansible-yml

.
└── PKC/
    └── resource/
        ├── ansible-yml
        ├── config
        └── script

In the host-restore file, replace the values to match your specific case:

[your-source-server] ansible_connection=ssh ansible_ssh_private_key_file=[.pem] ansible_user=[user] domain=[src-domain]
[your-destination-server] ansible_connection=ssh ansible_ssh_private_key_file=[.pem] ansible_user=[user] domain=[dest-domain]

In the cs-restore-remote.yml file, adjust the header section:

- name: Backup and Restore Loop
  hosts: all
  gather_facts: yes
  become: yes
  become_user: root
  vars:
    - pkc_install_root_dir: "/home/ubuntu/cs/"
    - src_server: [put-your-source-server-here]
    - dst_server: [put-your-destination-server-here]
  tasks:
    ... redacted

Execute Backup Restore Loop Process

To execute, change the current directory to PKC's project root folder and run the command below:

ansible-playbook -i ./resources/config/hosts-restore ./resources/ansible-yml/cs-restore-remote.yml

The output of this process is the latest backup of the MySQL database and the MediaWiki image files, stored in:

PKC/resource/ansible-yml/backup/

The output files are listed below:

| File Name | Folder | Remarks |
|---|---|---|
| *.tar.gz | ./resource/ansible-yml/backup | Latest backup files, with a timestamp in the file name. Each run generates two backup files: a database backup and an image backup. |
| restore_report.log | ./resource/ansible-yml/backup | Log of the Backup and Restore Loop output. |
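To pick up the newest backup files from that directory (for example, to copy them elsewhere), a sketch such as the following can be used. It assumes that the timestamped .tar.gz files listed above sit directly in the backup directory; latest_backups is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the N most recently modified .tar.gz files
# in a backup directory, newest first (default N=2: database + images).
latest_backups() {
  local dir="${1:-./resource/ansible-yml/backup}" n="${2:-2}"
  # ls -t sorts by modification time; the glob keeps only the archives.
  ls -1t "$dir"/*.tar.gz 2>/dev/null | head -n "$n"
}
```

Sorting by modification time rather than by the timestamp in the file name keeps the sketch independent of the exact naming scheme; it assumes file names do not contain newlines.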

References

[1] Wozniak, Steve (Feb 26, 2018). Steve Jobs never understood the computer part: Wozniak - ET Exclusive. The Economic Times.

[2] MediaWiki's File backend design considerations

[3] MediaWiki:Manual:Backing up a wiki

[4] Stack Overflow: Exporting and Importing Images in MediaWiki

Ansible Installation: https://docs.ansible.com/ansible/latest/installation_guide/intro_installation.html
