Inter-Organizational Workflow

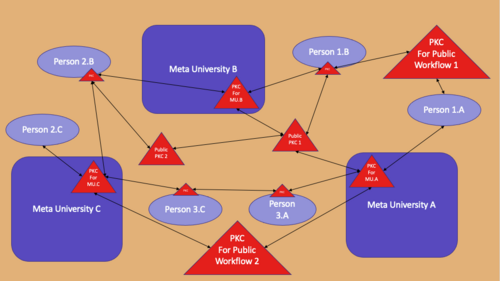


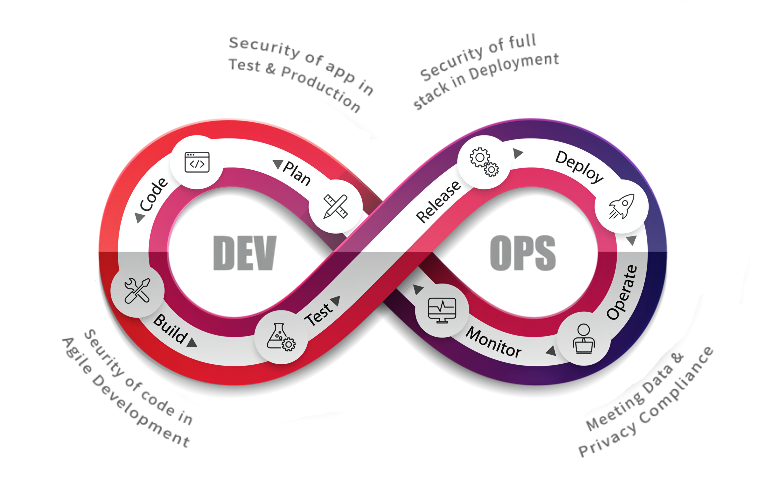


Latest revision as of 04:42, 20 December 2022

Inter-Organizational Workflow, also known as pCycle or the DevOps cycle, is a data-transforming process (a data manipulation program) carried out by a number of authenticated and authorized PKCs (shown as the red triangles in the attached diagram). It allows personal users and/or MU-compliant organizations to trigger data exchange activities among PKCs. For governing activities across inter-organizational workflows, see the Booklet on the Science of Governance; the PDF of the booklet is available as File:SoG 0 1 4.pdf.

The main proposition is that PKC serves as a data exchange platform, providing a set of common data exchange formats and a set of secure data transports that enable market exchange activities among the PKC user community.

In other words, an inter-organizational workflow takes inputs from one or more PKC instances and passes data content to other PKCs.

Individuals or MU-compliant organizations that use PKCs will naturally share a number of industry-strength data exchange technologies that enable data content to flow across social boundaries in ways that are witnessed and traced by a consistent data infrastructure. Specifically, globally accepted time-stamp services and an inter-PKC messaging bus will be crucial parts of PKC-enabled inter-organizational workflow.
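As a rough illustration of how such a bus could witness and trace exchanges, the sketch below stamps each message with a UTC time and a SHA-256 digest of its payload, and keeps an append-only trace of everything published. The names `Envelope`, `MessageBus`, and `verify` are hypothetical, and the local clock merely stands in for a globally accepted time-stamp service (a real deployment would obtain time-stamp tokens from an external authority, for example one following RFC 3161).

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, List


def _digest(payload: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal payloads hash identically.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Envelope:
    """A message passed between PKC instances (hypothetical format)."""
    sender: str     # e.g. "pkc-dev.org"
    recipient: str  # e.g. "pkc-ops.org"
    payload: dict   # the data content being exchanged
    timestamp: str  # ISO 8601 UTC time; local clock stands in for a time-stamp service
    digest: str     # SHA-256 of the canonical payload at publish time


class MessageBus:
    """Minimal in-memory inter-PKC bus with an append-only trace of messages."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[Envelope], None]]] = {}
        self.trace: List[Envelope] = []

    def subscribe(self, pkc: str, handler: Callable[[Envelope], None]) -> None:
        self._handlers.setdefault(pkc, []).append(handler)

    def publish(self, sender: str, recipient: str, payload: dict) -> Envelope:
        env = Envelope(
            sender=sender,
            recipient=recipient,
            payload=payload,
            timestamp=datetime.now(timezone.utc).isoformat(),
            digest=_digest(payload),
        )
        self.trace.append(env)  # record before delivery so every message is traced
        for handler in self._handlers.get(recipient, []):
            handler(env)
        return env


def verify(env: Envelope) -> bool:
    """Check that the payload still matches the digest recorded at publish time."""
    return _digest(env.payload) == env.digest
```

Because the trace keeps every envelope together with its digest, any later party can call `verify` to detect whether a payload was altered after it was sent.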

PKC as Inter-Organizational Data Appliance

PKC is implemented as a new breed of data appliance that can, in principle, include any number of database, data security, and data transport functionalities. Standardizing on one unified data appliance makes it relatively easy to enable workflows across different social boundaries. At the time of this writing, a significant amount of business is already conducted using similar technologies, on the assumption that running automated data services requires dedicated professional team members. PKC departs from that assumption by publishing the operational procedures, as well as the source code required to provision these data services, as learnable activities. Competent individuals or organizations that can carry out this technical work can therefore follow the published procedures to offer these services, building on the accumulated knowledge of the PKC user community. For this reason, Inter-organizational Workflow can also be thought of as PKC Workflow, MU Community Workflow, or simply PKC DevCycle.

The role of PKC is similar to that of the inter-blockchain bridging technologies attempted by blockchain technology providers[1],[2]. The difference is that PKC is simply a self-contained collection of software and data deployment tools, so it should be able to adopt whatever inter-chain technologies eventually emerge.

Because the workflow spans organizations, its essence is managing trust and reducing verification effort. Consequently, much of the knowledge needed to make the workflow operate online concerns Federated Identity Management, that is, authentication and authorization protocols such as OAuth.
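To make the authorization step concrete, here is a minimal sketch of checking a signed bearer token before a PKC accepts a request. It assumes JSON Web Tokens signed with a shared HMAC secret (HS256) purely so that the example runs on the Python standard library. Identity providers such as Keycloak usually sign access tokens with RS256 key pairs, and production code should rely on a vetted JWT/OIDC library. `issue_token` and `verify_token` are hypothetical helpers.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    # JWT segments use base64url without "=" padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # Restore the padding stripped by the encoder before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _sign(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(claims: dict, secret: bytes) -> str:
    """Create an HS256-signed JWT (stands in for the identity provider)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    return signing_input + "." + _b64url_encode(_sign(signing_input, secret))


def verify_token(token: str, secret: bytes, audience: str,
                 now: Optional[float] = None) -> dict:
    """Return the claims if the token is authentic, unexpired and meant for
    `audience`; otherwise raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never let the token choose "none"
    expected = _sign(f"{header_b64}.{payload_b64}", secret)
    # Constant-time comparison avoids leaking how many signature bytes matched.
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if claims.get("exp", 0) <= current:  # a missing "exp" is treated as expired
        raise ValueError("token expired")
    aud = claims.get("aud")
    if audience not in (aud if isinstance(aud, list) else [aud]):
        raise ValueError("wrong audience")
    return claims
```

In a PKC, a check like this would sit in front of every inbound request, so that only callers authorized through the federated identity provider can trigger data exchange.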

Introduction

The purpose of PKC Workflow, or the PKC DevOps Cycle, is to leverage well-known data processing tools to manage source code and binary files within a unifying abstraction framework, one that treats all data in terms of three universal abstractions:

- File from the Past: All data collected in the past can be bundled in Files.

- Page of the Present: All data to be interactively presented to data-consuming agents are shown as Pages.

- Service of the Future: All data to be obtained by other data manipulation processes are known as Services.
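One way to picture the three abstractions is as title prefixes in a wiki. The sketch below assumes that `File:` (a built-in MediaWiki namespace) holds the past, that a hypothetical custom `Service:` namespace holds the future, and that every other title is treated as a present-tense Page. The mapping is illustrative and is not PKC's actual configuration.

```python
from enum import Enum
from typing import Tuple


class Namespace(Enum):
    """The three universal abstractions, each tied to a point in time."""
    FILE = "Past"       # data already collected, bundled as files
    PAGE = "Present"    # data presented interactively to consumers
    SERVICE = "Future"  # data to be obtained by other processes


# "File" is a built-in MediaWiki namespace; "Service" is a hypothetical custom one.
_PREFIXES = {"File": Namespace.FILE, "Service": Namespace.SERVICE}


def classify(title: str) -> Tuple[Namespace, str]:
    """Split a wiki-style title into (namespace, name); all other titles are Pages."""
    prefix, sep, rest = title.partition(":")
    if sep and prefix in _PREFIXES:
        return _PREFIXES[prefix], rest
    return Namespace.PAGE, title
```

For example, `classify("File:SoG 0 1 4.pdf")` places the booklet PDF in the past-tense File namespace.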

By classifying data assets as files, pages, and services and managing them with version control systems, we can incrementally sift through data in three namespaces that intuitively label the nature of data according to time progression, reflecting the partially ordered nature of PKC Workflow. This approach to iteratively cleansing data is also known as the DevOps cycle.
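The partial order mentioned above can be illustrated with a commit graph: one revision precedes another only if it is an ancestor, and revisions on parallel branches are simply incomparable. This is a minimal sketch over a plain dictionary of parent links; the function names and revision ids are made up for illustration.

```python
from typing import Dict, List

History = Dict[str, List[str]]  # revision id -> ids of its parent revisions


def happens_before(history: History, a: str, b: str) -> bool:
    """True if revision `a` is a strict ancestor of revision `b`."""
    stack = list(history.get(b, []))
    seen = set()
    while stack:  # depth-first walk back through the parents of `b`
        rev = stack.pop()
        if rev == a:
            return True
        if rev not in seen:
            seen.add(rev)
            stack.extend(history.get(rev, []))
    return False


def comparable(history: History, a: str, b: str) -> bool:
    """In a partial order, two revisions need not be ordered at all."""
    return a == b or happens_before(history, a, b) or happens_before(history, b, a)
```

A branch-and-merge history shows the idea: the two branch tips are incomparable, yet both precede the merge.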

Given the above-mentioned assumptions, data assets can be organized according to these three types in the following way:

- Files can be managed using content-addressing networks. Because they can be stored in IPFS format, where a file's address is derived from its content, their content cannot be changed without changing the address.

- Pages are composed of data content, style templates, and UI/UX code, so they require test and verification procedures to ensure healthy operation. The final presentation of data content often needs to be adjusted dynamically to the user's device and its operating context. Pages are therefore considered deployed data assets.

- Services are programs with well-known names that should be provisioned on computing devices together with service quality assessment. They are usually associated with Docker-like container technologies and have published names registered in places like Docker Hub. As service provisioning technologies mature, tools such as Kubernetes will manage services with real-time service quality updates, so that the behavior of data services can be tracked and diagnosed with industry-strength protocols.
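The immutability of content-addressed Files follows from deriving the address from the bytes themselves. The toy store below uses a plain SHA-256 hex digest as the address. This is a simplification: real IPFS CIDs add multihash/multibase encoding and chunk large files, so these addresses are not IPFS-compatible, and `ContentStore` is a hypothetical name.

```python
import hashlib
from typing import Dict


class ContentStore:
    """Toy content-addressed store: the address of a blob is its SHA-256 digest."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data  # identical content always maps to the same address
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]
        # Re-hash on read: tampered content would no longer match its address.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data
```

"Editing" a file in such a store really means storing new content under a new address; the old version stays retrievable under its original address.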

PKC Workflow in a time-based naming convention

Despite the complexity of possible data deployment scenarios, we will show that this complexity can be compressed into a unifying process abstraction. PKC approximates that abstraction by acting as a container for recipes that cope with different use cases, treating all phenomena in terms of time-stamped data content. PKC then provides an extensible dictionary that continuously defines the growing vocabulary, and at the same time provides computable representations of solutions, provided that the computing results are deployed on services that know how many users and how much data processing capacity they can mobilize to meet demand. In other words, under this three-layered (File, Page, Service) classification system, PKC Workflow provides a fixed grounding metaphor (Past, Present, Future) for dealing with all data.
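A time-stamped naming convention can be as simple as embedding a fixed-width UTC timestamp in each title, so that sorting names alphabetically also sorts them chronologically. The title layout `Namespace:YYYYMMDDTHHMMSSZ/name` and the function `timestamped_name` are hypothetical choices for illustration, not an established PKC convention.

```python
from datetime import datetime, timezone


def timestamped_name(namespace: str, name: str, when: datetime) -> str:
    """Build a title such as 'File:20221220T044200Z/backup.tar' (hypothetical layout).

    Fixed-width UTC timestamps make lexicographic order match chronological order.
    """
    if when.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    stamp = when.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"{namespace}:{stamp}/{name}"
```

Requiring timezone-aware timestamps avoids silently mixing local times from different organizations.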

Automated Workflow

The main idea of PKC is to create a stable (invariant) process that captures data as a person or a project accumulates operational experience. This invariant process will be like a Möbius strip: a never-ending cycle that keeps data in an iterative backup/restore cycle.
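A single turn of the backup/restore cycle can be sketched as follows: serialize the state deterministically, record its digest, restore and verify it, then fold in new operational experience. The invariant is that restoring a backup reproduces the state exactly. The function names are hypothetical, and JSON stands in for whatever backup format a PKC actually uses, so the sketch assumes JSON-compatible state with string keys.

```python
import hashlib
import json
from typing import Tuple


def backup(state: dict) -> Tuple[bytes, str]:
    """Serialize the state deterministically; return (snapshot, SHA-256 digest)."""
    snapshot = json.dumps(state, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return snapshot, hashlib.sha256(snapshot).hexdigest()


def restore(snapshot: bytes, digest: str) -> dict:
    """Refuse corrupted snapshots; otherwise deserialize them."""
    if hashlib.sha256(snapshot).hexdigest() != digest:
        raise ValueError("snapshot does not match its digest")
    return json.loads(snapshot)


def cycle(state: dict, experience: dict) -> dict:
    """One turn of the loop: back up, restore, check the invariant, add new data."""
    restored = restore(*backup(state))
    if restored != state:
        raise RuntimeError("backup/restore did not round-trip")
    return {**restored, **experience}
```

Calling `cycle` repeatedly, each time with newly accumulated data, gives the never-ending loop described above.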

Forming Productive Norms through Workflow

Once certain patterns are established in inter-organizational workflows, organizations and individuals will necessarily adapt their interactions to the norms established throughout the community. Well-thought-out policies and incentive programs will therefore be crucial in helping individuals and participants adapt their behavior and local programs accordingly. For instance, since the data industry is converging on YAML as a standard declarative language for container orchestration and system administration (e.g., Ansible) tasks, standardizing data exchanges on YAML across the MU community will guide individual users to become more familiar with YAML, and even to develop more tools and templates for YAML in their local PKC instances. Similarly, because PKC is a data store of templates and content modules, publishing and promoting standards for templates and content modules will significantly influence how data content accumulates in PKCs over time. This work must be carefully planned and crafted as part of Data Architecture considerations.

PKC DevOps Strategy

There will be three public domains to test the workflow:

- pkc-dev.org for development tests

- pkc-ops.org for candidate service deployment

- pkc-back.org for demonstrating production data backup

Task 1 - done

Upgrade the current container from MediaWiki 1.35 to the latest stable version (1.37.1) (see https://www.mediawiki.org/wiki/Download), check compatibility with the related extensions, and create a pre-built container image on the local machine.

- Update the Matomo spatial feature: done

- Install the YouTube extension: done

Task 2 - done

Deploy the container to all servers, make sure it works with the Ansible script, and download the pre-built image to the cloud machine. Ensure it works with Matomo and Keycloak.

Task 3 - done

Import content from pkc.pub and try to convert EmbedVideo usage to the YouTube extension, or find a newer extension that supports video embedding.

Task 4

Connect a local PKC installation to the Keycloak instances at pkc-mirror.de using a federated account, and test its functionality.

Task 5

Update the list of file types allowed for upload to the server, specifically making svg uploadable. This can be tested using the draw.io extension.

Task 6

Provide a mechanism that makes API calls across the various computing services in PKC programmable, ideally allowing a kind of Workflow or Smart Contract to be programmed and tested within the Federated PKC system.
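As one possible starting point for this task, API calls could be modeled as named steps that read from and write to a shared context. This makes a workflow programmable and easy to test with stubs. In this sketch `run_workflow` and the three step functions are hypothetical. The steps only simulate calls to an identity provider and to PKC instances and perform no network I/O.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[dict], dict]]  # (step name, function returning updates)


def run_workflow(steps: List[Step], context: dict) -> Tuple[dict, List[str]]:
    """Run steps in order, merging each step's output into the shared context.

    Returns the final context and the names of completed steps. If a step
    raises, the exception propagates and later steps are not run.
    """
    log: List[str] = []
    for name, call in steps:
        context = {**context, **call(context)}
        log.append(name)
    return context, log


def authenticate(ctx: dict) -> dict:
    # Stand-in for a token request to an identity provider such as Keycloak.
    return {"token": f"token-for-{ctx['user']}"}


def fetch_page(ctx: dict) -> dict:
    # Stand-in for reading page content through one PKC's API.
    return {"content": f"Main_Page fetched with {ctx['token']}"}


def publish(ctx: dict) -> dict:
    # Stand-in for writing the fetched content to another PKC.
    return {"published": bool(ctx["content"])}
```

Because each step is a plain function of the context, the same workflow definition can be tested locally with stubs and later pointed at real service calls.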

Task 7

Consider using blockchain infrastructures, especially inter-chain/parachain infrastructures such as Cosmos and Polkadot, as existing solutions for implementing inter-organizational governance.

For details of the process, see the XLP DevOps Process page. For the known issues log, see Devops Known Issues.

A reference model inspired by Accounting Practice

In a paper series[3] called Quantum Information and Accounting Information, Demski, Fitzgerald, Ijiri, Ijiri, and Lin presented a strong tie between the topological representation of data and accounting information. The paper even presented a Möbius strip that visually resembles the DevOps cycle. For proper modeling of possible workflow behavior, it will be useful to refer to the Actor Model[4].
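For readers unfamiliar with the Actor Model, the core idea is that independent actors keep private state and interact only by sending asynchronous messages, which are delivered to mailboxes. The following single-threaded sketch captures that idea with a simple round-robin scheduler. It is a teaching aid rather than an actor framework, and the class name `ActorSystem` and the actor names are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Dict, Tuple

# A behavior receives (system, own name, sender name, message).
Behavior = Callable[["ActorSystem", str, str, object], None]


class ActorSystem:
    """Single-threaded toy actor system.

    Actors never share state; they only send messages, which wait in the
    recipient's mailbox until the scheduler delivers them one at a time.
    """

    def __init__(self) -> None:
        self._behaviors: Dict[str, Behavior] = {}
        self._mailboxes: Dict[str, Deque[Tuple[str, object]]] = {}

    def spawn(self, name: str, behavior: Behavior) -> None:
        self._behaviors[name] = behavior
        self._mailboxes[name] = deque()

    def send(self, sender: str, recipient: str, message: object) -> None:
        # Asynchronous: the message is only enqueued, not processed here.
        self._mailboxes[recipient].append((sender, message))

    def run(self, max_deliveries: int = 1000) -> int:
        """Deliver messages round-robin until mailboxes are empty; return the count."""
        delivered = 0
        while delivered < max_deliveries:
            ready = [name for name, box in self._mailboxes.items() if box]
            if not ready:
                break
            for name in ready:
                if delivered >= max_deliveries:
                    break
                sender, message = self._mailboxes[name].popleft()
                self._behaviors[name](self, name, sender, message)
                delivered += 1
        return delivered
```

Modeling each PKC as an actor in this style makes it easy to reason about which messages a workflow exchanges and in what order they can arrive.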

Notes

- When writing a logic model, one should be aware of the difference between concept and instance.

- A logic model is composed of many submodels. When one does not intend to specify their abstract parts, one can use the Function Model alone.

- What is the relationship between the model's submodules, and how does it relate to the relationships among all the subfunctions?

- Sometimes the input and the process are ambiguous. For example, the Service namespace is required to achieve the goal, yet it might be either an input or a product along the process. In general, both the input and the process contain uncertainty and require a decision.

- A Logic Model's parameter is reduced to its name, which is its most important part. The name should summarize its value.

- Note that the name Jenkins refers to the resource itself, whereas the name Jenkins Implementation On PKC carries more contextual information and is therefore more suitable.

- I intended to name the PKC Workflow's submodel "PKC Workflow/Jenkins Integration". However, a more appropriate name might be Task/Jenkins Integration, whose output would then feed into PKC Workflow/Automation. PKC Workflow should be organized as the project, and the Workflow should be the desired output of that project. The Task category is for moving toward that state. So a task can be the process of a project, and the output of the task can serve as the process of the workflow.

- Each goal is associated with a static plan and dynamic process.

- To specify a logic model's input and output, we can collect the input/output of every subprocess in the process (by transclusion).

- I renamed some models:

  - TLA+ Workflow -> System Verification

  - Docker Workflow -> Docker registry

  - Question: how should we name things? Naming is a kind of summarization that loses information.

Reference

- [1] Blog of Cosmos Network. https://blog.cosmos.network/

- [2] Official Website of Polkadot Network. https://polkadot.network/

- [3] Demski, Joel; Fitzgerald, S.; Ijiri, Yuji; Ijiri, Yumi; Lin, Haijin (August 2006). "Quantum Information and Accounting Information: A Revolutionary Trend and the World of Topology" (PDF).

- [4] Hewitt, Carl (Nov 21, 2012). "Hewitt, Meijer and Szyperski: The Actor Model (everything you wanted to know...)" (video). jasonofthel33t.