The Booklet

The Science of Governance Booklet

Theory: the Science of Governance (SoG)

Context: Digital Transformation challenges Social Stability

Time is a universal medium that connects events; knowing when events happen is integral to our conception of everything. This is shown through the sequencing and recording of information, which is why both “time is money” and “knowledge is power.” Every day, people around the world are persuaded by others who simply have more information. This is known as information asymmetry: one entity has better access to knowledge than another. Examples range from car salespeople selling bad cars to unwary buyers, to developing countries being charged excessive interest rates by lenders, crippling their economies. Information asymmetry can be used with good intentions, but it can also lead to exploitation.

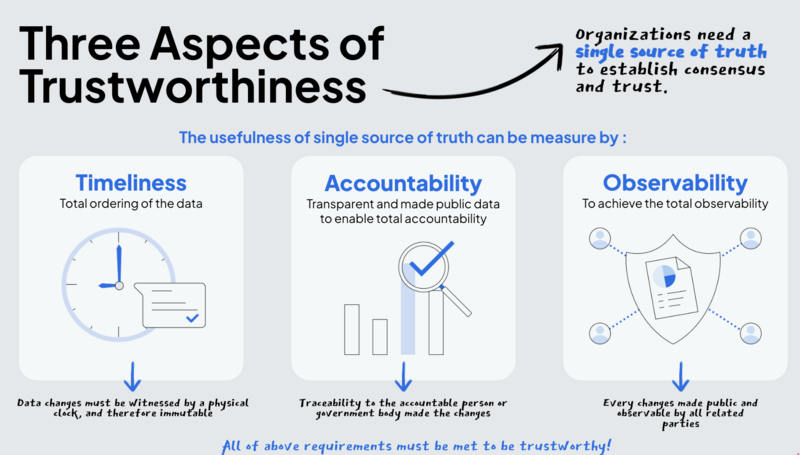

Currently, data processing technologies are increasing information asymmetry in ways that are becoming a major problem in public administration. Individuals or public institutions with greater access to data or data processing technologies can exploit these technologies maliciously and systematically. To ensure sustained justice in modern societies, the notion of governance must be grounded in the root of the power of persuasion: information asymmetry. We call this scientific endeavor the Science of Governance (SoG). SoG focuses on three fundamental properties of information asymmetry: the timeliness, accountability, and observability of public data.

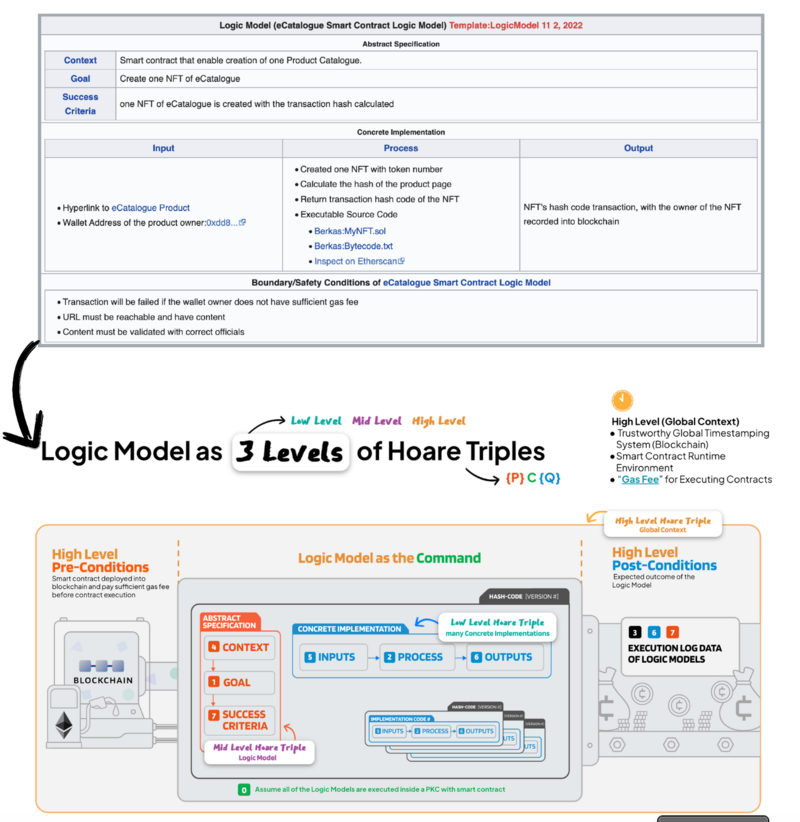

Power structures have always needed tools and methods to guide timely actions, create accountability for policy outcomes, and observe progress. Models of thought have been used to create and execute policies shaped by these power structures. They include “Objectives and Key Results” (OKR), used to define measurable goals and measure outcomes; “Management by Objectives” (MBO), which defines specific objectives and the sequence of steps to achieve each one; and “logic models,” which describe the chain of causes and effects leading to an outcome. Because they have not adopted secure and field-tested information systems, political structures still function mostly through verbal debate, write their offline codes only as human-readable text, and store information on media that relevant parties cannot automatically check and retrieve. A hyperlinked logic model is shown below:

[Table: Logic Model (Science of Governance), Template:LogicModel, 2022; table contents not recoverable in this copy]

Large-scale digital technology uses these logic models; even modern information technologies such as smartphones and blockchains were built on a foundation of prior inventions and systems using similarly formatted models. A logic model is a domain-independent specification format that allows any self-governing body to use contemporary technologies to represent the timeliness of information distribution, the accountability of data changes, and the observability of policy outcomes. As a starting point, existing governance tools such as OKR and MBO can be integrated with hyperlinked documents to help keep policies and execution results timely, accountable, and observable. To pursue this within a formalized framework, we name this new field SoG.

Goal: Ensure Governance Correctness by Logic

SoG works by establishing a fair and just political process in a world overwhelmed by the asymmetric distribution of data governance technologies. It provides a trustworthy foundation to help governing bodies allocate resources and execute policies efficiently, drawing on established governance theories, creating new ones, and employing readily available technologies to deploy solutions in the real world. In other words, an End-to-End solution for governance must have a scientific basis that can be scaled up in applications through technology, and a unifying policy decision frame that applies to all application domains. This requires the Science of Governance to be abstract, so that it is not tied to a specific application context. It also needs to be concrete, so that all policy decisions are observable and accountable in terms of socially and physically meaningful data. The only medium that can handle this duality is logic, more precisely, the logic of Correct by Design (CbD).

The Science of Policy Correctness

Before CbD is explained, one must understand the term “correctness.” There is a scale-free and domain-independent logical way to express correctness: the Venn diagram of correctness shown in the accompanying figure, in which correctness is the logical intersection of safety and liveness conditions. From a scientific viewpoint, correctness should be determined objectively according to explicitly encoded safety and liveness “social contracts.”

Safety means nothing bad happens: a system or policy is considered safe if its execution produces nothing bad. If a player goes through an entire football game without being injured, the game is considered safe for that player.

Liveness means something good happens during the execution of a system or policy. In a football game, the player scoring a goal would be a liveness condition. The intersection of safety and liveness is clear in this example: the player is uninjured and scores a goal. This is “correctness.”

This generic, domain-independent statement of correctness applies not only to computer science but also to governments. It allows policy designers to list separately the conditions considered bad and the conditions considered good. This logical decomposition of correctness is a powerful intellectual construct that enabled system engineers and computer scientists to build systems as complex as the Internet. How consistently a governing body applies this construct in policy framing determines how well an organization can be governed by explicit rules. As the number of conditions grows, however, organizations need an additional way to ensure correctness, particularly in contracts. This is where Hoare Logic, Hoare Triples, and CbD come in.
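The separation of "bad" and "good" conditions can be made concrete with a small sketch. It assumes, purely for illustration, that a policy's execution is recorded as a finite trace of states (Python dictionaries); the field names `injured` and `goals` are hypothetical stand-ins for the football example. Safety is checked at every step, liveness asks whether each good condition eventually occurs somewhere in the finite trace, and correctness is their conjunction.

```python
from typing import Callable, Sequence

State = dict
Predicate = Callable[[State], bool]


def is_safe(trace: Sequence[State], bad: Sequence[Predicate]) -> bool:
    """Safety: no state in the trace satisfies any 'bad' condition."""
    return not any(cond(state) for state in trace for cond in bad)


def is_live(trace: Sequence[State], good: Sequence[Predicate]) -> bool:
    """Liveness (on a finite trace): every 'good' condition holds in some state."""
    return all(any(cond(state) for state in trace) for cond in good)


def is_correct(trace: Sequence[State], bad: Sequence[Predicate],
               good: Sequence[Predicate]) -> bool:
    """Correctness as the intersection of safety and liveness."""
    return is_safe(trace, bad) and is_live(trace, good)


# Hypothetical football example: each state is a snapshot of the game.
bad_conditions = [lambda s: s["injured"]]
good_conditions = [lambda s: s["goals"] > 0]

game = [
    {"minute": 0, "injured": False, "goals": 0},
    {"minute": 55, "injured": False, "goals": 1},
    {"minute": 90, "injured": False, "goals": 1},
]
print(is_correct(game, bad_conditions, good_conditions))  # True
```

Keeping the two lists independent mirrors the text: a policy designer can add a new bad condition without touching the good ones.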

In 1969, as computer programs came to involve more and more conditions, Tony Hoare created a logic system to reason rigorously about program correctness. Key to this system was the Hoare Triple, written {P} C {Q}. {P} is the precondition, the assertion that must hold before the command is executed. C is the command, the action that takes place. {Q} is the postcondition, the assertion that holds after the command has executed. In terms of system correctness, safety corresponds to the precondition, the event that occurs once safety is satisfied corresponds to the command, and liveness corresponds to the postcondition. When executing C from a state satisfying {P} results in a state satisfying {Q}, the system worked as planned; that is, it was Correct by Design.
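A Hoare Triple can be held directly as a data structure. The following is a minimal Python sketch, assuming states are dictionaries and the command is a function from a starting state to a final state; the class `HoareTriple`, its method `holds_on`, and the state fields are hypothetical names that anticipate the football example in the next paragraph. It only tests a triple on one concrete starting state; proving a triple for all states is the job of Hoare logic itself, not of testing.

```python
from dataclasses import dataclass
from typing import Callable

State = dict


@dataclass(frozen=True)
class HoareTriple:
    pre: Callable[[State], bool]       # {P}: assertion before the command runs
    command: Callable[[State], State]  # C: maps a starting state to a final state
    post: Callable[[State], bool]      # {Q}: assertion after the command runs

    def holds_on(self, state: State) -> bool:
        """Test {P} C {Q} on one starting state.

        If {P} is false the triple promises nothing, so it holds vacuously
        (Hoare's partial-correctness reading, assuming C terminates).
        """
        if not self.pre(state):
            return True
        return self.post(self.command(state))


def fit_and_fair(s: State) -> bool:
    return s["fit"] and not s["history_of_fouls"]


def scored_and_played_90(s: State) -> bool:
    return s["goals"] >= 1 and s["minutes_played"] >= 90


def full_match(s: State) -> State:
    return {**s, "minutes_played": 90, "goals": s["goals"] + 1}


def fogged_off(s: State) -> State:
    return {**s, "minutes_played": 10}


start = {"fit": True, "history_of_fouls": False, "minutes_played": 0, "goals": 0}
print(HoareTriple(fit_and_fair, full_match, scored_and_played_90).holds_on(start))  # True
print(HoareTriple(fit_and_fair, fogged_off, scored_and_played_90).holds_on(start))  # False
```

The same precondition and postcondition are reused with two different commands; only the command that actually reaches {Q} yields a correct triple.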

In our football game example, {P} would be that the player is physically fit to play and has no intention or history of hurting other players. C would be playing the game, and {Q} would be the player scoring goals and playing at least 90 minutes. The example is Correct by Design if these conditions are met as specified. But if fog stopped the game after only 10 minutes, {Q} would not hold, and we would know the system did not attain correctness in the Correct by Design sense. CbD brings logical symmetry to systems of any kind by applying a consistent set of rules to classify their safety and liveness conditions. This naming convention allows any system to identify errors (safety) and recognize accomplishments (liveness). As Henri Poincaré once said: “Mathematics is the art of giving the same name to different things.” CbD assigns a consistent naming scheme (safety, liveness, and correctness) to all governance structures in causal categories.

Hoare Triples can go beyond computer programming, however, because every action taken by any individual, group, or government can be expressed as a Hoare Triple. This includes something as simple as eating: “hunger” would be the precondition, “eating digestible food” would be the command, and “increased energy” would be the postcondition. Governance concepts such as Rousseau’s Social Contract can be expressed as a triple as well: people giving power to a government would be the precondition, the creation of a Social Contract would be the command, and the government receiving power would be the postcondition. These triples can be simple or detailed, and one can form a chain of triples by using the postcondition of one triple as the precondition of the next. In this way, Hoare Triples can be linked and composed to express more complex policies or programs. It is this composability of arrows, or Hoare Triples, that makes them a domain-independent way to organize correctness in a formalized data structure. This logic system is instrumental in guiding people through a world of increasingly complex contracts.
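Chaining triples corresponds to the sequential-composition rule of Hoare logic: from {P} C1 {Q} and {Q} C2 {R} one may conclude {P} C1; C2 {R}. The Python sketch below illustrates that rule with the eating example; the state fields (`hungry`, `energy`, `task_done`), the second step `work`, and the helper `then` are hypothetical. Because predicates are opaque functions here, the sketch cannot check automatically that the first postcondition implies the second precondition; that remains the designer's obligation.

```python
from dataclasses import dataclass
from typing import Callable

State = dict
Pred = Callable[[State], bool]


@dataclass(frozen=True)
class Triple:
    pre: Pred
    command: Callable[[State], State]
    post: Pred

    def holds_on(self, state: State) -> bool:
        # Vacuously true when the precondition fails (partial correctness).
        return (not self.pre(state)) or self.post(self.command(state))


def then(first: Triple, second: Triple) -> Triple:
    """Sequential composition: {P} C1 {Q}, {Q} C2 {R}  =>  {P} C1; C2 {R}."""
    return Triple(
        pre=first.pre,
        command=lambda s: second.command(first.command(s)),
        post=second.post,
    )


# Hypothetical chain: hunger -> eat -> energy -> work -> task done.
eat = Triple(
    pre=lambda s: s["hungry"],
    command=lambda s: {**s, "hungry": False, "energy": s["energy"] + 50},
    post=lambda s: s["energy"] >= 50,
)
work = Triple(
    pre=lambda s: s["energy"] >= 50,  # equals eat.post: the links line up
    command=lambda s: {**s, "energy": s["energy"] - 30, "task_done": True},
    post=lambda s: s["task_done"],
)
day = then(eat, work)
print(day.holds_on({"hungry": True, "energy": 10, "task_done": False}))  # True
```

Because `then` returns another `Triple`, composed triples can themselves be composed, which is the property the text relies on for building larger policies.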

Writing down satisfactory conditions in contracts is not new. What is new is the sheer number of possible conditions in an already highly interconnected world. Yet all these possibilities can be dealt with symmetrically through Hoare logic and through concepts that formally frame correctness, such as CbD. Because they are composable, Hoare Triples may serve as the universal data primitive for encoding arbitrarily large-scale social and industrial governance challenges. Because they are simple, they can scaffold application scenarios that involve complex interactions among many knowledge domains. The Hoare Triple is a universal construct: it is everywhere and has already been adopted by many popular governance tools, such as the Logic Model (see Appendix on Logic Model as Multi-Level Hoare Triples).

Success Criteria: Associate social and physical meaning to Data

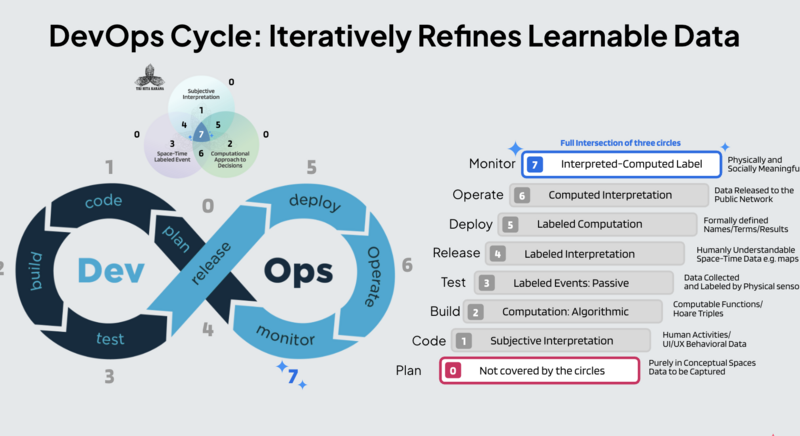

Only after recognizing that a unifying logical primitive, the Hoare Triple, is a grounding representation of scientific judgment can one see policy framing and governance practice not just as an art form but also as a scientific endeavor. This recognition also marks a new era of Digital Transformation, in which Correct by Design methodology is actively applied not only to engineering inanimate objects, but also, with the same principles and tools, to reasoning logically about ethical integrity. In a highly connected world, we should be able to adopt technologically sophisticated thinking tools to tackle the complexity created by systems driven by Big Data. However, data and the causal relations among pieces of information must be associated with physical and social meaning for the data to be relevant to governance.

As an emerging field of science, SoG needs to be grounded and validated in the physical world and must be socially meaningful to the people who use it. Data may be associated with physically observable parameters, such as timestamps and spatial markers (addresses and relative locations). To associate social meaning with data, many socially relevant participants must agree on certain pieces of data; such agreements are often called signed contracts. A signed contract is usually dated, and that date is a timestamp. Dating and signing increase the trustworthiness of the contract.

Symmetry breaking: Time flies like an arrow

It is time, the phenomenon that captures the unifying direction of causal relations, that breaks symmetry in our physical world. It breaks directional symmetry by forcing us into the future, never the past. Similarly, governance is about capturing opportunities in time and must use past data to inform future actions. The source of governing power is the advantage of having access to more relational data than the subjects being governed. Therefore, governance can be considered a way of conscientiously exploiting information asymmetry. Without loss of generality, in theoretical computer science all data types are representable as ordered pairs[1]; even “faulty” data and “failed experiments” are nothing but ordered pairs of data points, representable as Hoare Triples. According to Luo[2], computationally generated data could create novel and useful features in potential design spaces with high payoffs. To benefit the people, governments should innovate by exploring policies in the most comprehensive design space that is practically reachable; using a generic data representation such as the Hoare Triple as the universal building block makes that design space efficiently representable.

For governmental purposes, one can see these basic data types as Logic Models that keep policy execution on track. Framing correct governance practices needs only one data type: the “ordered” relation. This single data structure, an ordered pair of key-value data points drawn graphically as an arrow, uses discrete symbols to denote causal relations, which is a fundamental reason the Digital Transformation is inevitable. Arrows, ordered relations, and Hoare Triples are all scaffolding data structures that help humans and machines perceive time in logically computable terms. Only after causal hypotheses are given finite symbolic names in these logically represented formats can the correctness of prescribed policies become accountable, observable, and terminable in finite time. Because governance is an intentional act, it must be encoded in data. There is an often-ignored universal truth: all data encoding schemes can be absorbed into a set of ordering relations, as shown in diagrams composed of connected arrows. Executable programs of any kind, especially programs designed for public policy, are made of nothing but arrows, because they are just causally bounded events over time.
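The idea that a policy workflow is "made of nothing but arrows" can be illustrated with a minimal Python sketch: the workflow is stored only as a set of ordered pairs `(before, after)`, and a valid execution order is derived from those pairs alone using the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The step names are hypothetical. If the arrows contained a cycle, `static_order()` would raise `graphlib.CycleError`, which is one way an inconsistent causal specification would surface.

```python
from graphlib import TopologicalSorter

# Each arrow is an ordered pair (cause, effect): "cause must happen before effect".
arrows = {
    ("draft_policy", "public_review"),
    ("public_review", "approve_budget"),
    ("approve_budget", "deliver_service"),
    ("deliver_service", "pay_supplier"),
    ("deliver_service", "measure_outcome"),
}


def execution_order(pairs):
    """Derive one valid order of events from ordered pairs alone."""
    graph = {}
    for before, after in pairs:
        graph.setdefault(after, set()).add(before)  # node -> its predecessors
        graph.setdefault(before, set())
    return list(TopologicalSorter(graph).static_order())


order = execution_order(arrows)
print(order[0])  # draft_policy
```

Steps that share no arrow (here `pay_supplier` and `measure_outcome`) may appear in either order; only the ordering the pairs actually state is enforced.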

Timestamping Hoare Triples with Blockchain

Time penetrates everywhere. Once many participants agree on a reliable time source, complex workflows among these parties can be coordinated across vast distances. Blockchain should be used as the medium because it is an immutable ledger: a permanent, indelible, and unalterable history of transactions. Because every blockchain regularly packages mutual agreements into a “block” at recurring time intervals, this packaging process makes blockchain both socially meaningful (each block records many social agreements) and physically meaningful (each block denotes an increment of time).
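The dual role of a block, recording agreements and marking an increment of time, can be illustrated with a toy hash-linked ledger. This is a minimal Python sketch, not a real blockchain: there is no consensus, networking, or proof-of-work, and the block fields are assumptions. Each block stores a timestamp, its agreements, and the SHA-256 hash of the previous block, so altering any earlier block breaks the link to the block after it.

```python
import hashlib
import json
import time
from typing import List, Optional


def block_hash(block: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON encoding of the block."""
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_block(chain: List[dict], agreements: List[str],
                 timestamp: Optional[float] = None) -> dict:
    """Package agreements into a new block linked to the previous block."""
    block = {
        "index": len(chain),
        "timestamp": time.time() if timestamp is None else timestamp,
        "agreements": agreements,
        "prev_hash": block_hash(chain[-1]) if chain else "0" * 64,
    }
    chain.append(block)
    return block


def is_valid(chain: List[dict]) -> bool:
    """Each block must reference its predecessor's hash and not go back in time."""
    for prev, cur in zip(chain, chain[1:]):
        if cur["prev_hash"] != block_hash(prev):
            return False
        if cur["timestamp"] < prev["timestamp"]:
            return False
    return True


ledger: List[dict] = []
append_block(ledger, ["A agrees to deliver 10 units to B"], timestamp=1_700_000_000)
append_block(ledger, ["B agrees to pay A within 30 days"], timestamp=1_700_000_600)
print(is_valid(ledger))  # True
ledger[0]["agreements"][0] = "A agrees to deliver 5 units to B"  # tamper with history
print(is_valid(ledger))  # False
```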

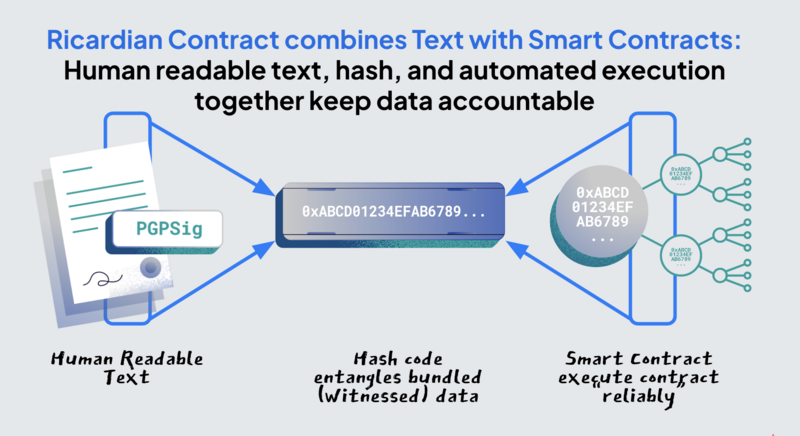

By using a blockchain as a common ledger for sharing actionable code, often called a Smart Contract, both the precondition and the postcondition of a Hoare Triple can be bound to socially agreed physical time. In other words, a trusted timestamp system defines the temporal ordering of events. It allows “commands,” or “contractualized actions,” to be executed in a sequence that fulfills the functions of an arbitrarily complex workflow that must be met for agreements, all recorded on the blockchain. In the physical world, the sequential order of contract execution can easily be encoded as logical assertions in the preconditions of other commands. The time allowed to complete a command, such as a payment due date after product delivery, can be encoded in postconditions. These time-bound logical statements are the essential programming constructs of modern workflow systems, often called Enterprise Resource Planning (ERP) systems. An ERP system’s most essential function is to ensure that all enterprise actions across highly dispersed geographical locations follow programmatically defined temporal ordering sequences. With blockchains and Smart Contracts providing a trustworthy global clock (via timestamps and hashes) and custom-defined action-triggering conditions, many expensive ERP software solutions could be replaced by public computing services, sometimes referred to as the Web3 movement.

Hoare Triple as the Open Format

Governmental policies are code, and code should be represented in domain-independent and scale-free data structures such as Hoare Triples. The domain-independent and scale-free nature of the Hoare Triple provides a unifying data primitive to express and examine the process of policy construction and deconstruction, so it is not confined to narrow fields or particular physical scales. The same reasoning applies to business operations and even to personal event management.

Coupled with a trustworthy timestamping system, a time-bound Hoare Triple is an Open Format that constructs workflows for any application, regardless of domain and scale. Open Format is an important concept in the global Digital Transformation. The integrity of digitized governmental policies must be unified in a logically invariant data type, allowing any policy to be computationally examined for correctness. Correct by Design provides a logical framework that connects causal structures and policy statements in a common data type not tied to any specific interested party. This openness of format enables a unifying semantic realm in which to reason about policy consequences in one logical universe. We also encourage governmental agencies to share their practices with the scientific community by creating an Open Format Repository across many government agencies, so that their governance experience can be shared and reused. This repository will catalog existing security-aware communication formats, curate these designed artifacts as Ricardian Contracts, and manage the evolutionary history of the curated content as Non-Fungible Tokens (NFTs). Such a blockchain-validated (timestamped) repository of executable source code with textual descriptions would maximize public accessibility, making Open Format knowledge reusable across application domains and shareable by various sovereignties and cultures.
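To illustrate how ordering and deadlines can live in pre- and postconditions, here is a minimal Python sketch of a time-bound Hoare Triple. It assumes events are logged with timestamps from an agreed clock (for example, block timestamps); the class `TimedTriple`, the event names, and the 30-day payment window are hypothetical. The precondition of `pay` requires that `deliver` is already recorded, and its postcondition requires that payment was recorded within the due period.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Optional

Log = Dict[str, datetime]  # event name -> agreed timestamp (e.g. a block time)


@dataclass(frozen=True)
class TimedTriple:
    command: str                   # C: the event this step records
    requires: Optional[str]        # {P}: this event must already be in the log
    deadline: Optional[timedelta]  # {Q}: C recorded within this delay after `requires`

    def pre(self, log: Log) -> bool:
        return self.requires is None or self.requires in log

    def post(self, log: Log) -> bool:
        if self.command not in log:
            return False
        if self.requires is None or self.deadline is None:
            return True
        return log[self.command] - log[self.requires] <= self.deadline

    def execute(self, log: Log, at: datetime) -> bool:
        """Record C at time `at` if {P} holds, then report whether {Q} holds."""
        if not self.pre(log):
            raise ValueError(f"precondition failed: {self.requires!r} not recorded")
        log[self.command] = at
        return self.post(log)


deliver = TimedTriple(command="deliver", requires=None, deadline=None)
pay = TimedTriple(command="pay", requires="deliver", deadline=timedelta(days=30))

log: Log = {}
print(deliver.execute(log, datetime(2022, 11, 1)))  # True
print(pay.execute(log, datetime(2022, 11, 20)))     # True: paid 19 days after delivery
```

Calling `pay.execute` on a log without a delivery raises `ValueError`, which is how the ordering constraint in the precondition shows up; a payment recorded after 30 days returns `False` because the postcondition fails.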

Open Format for Everyone

Correct by Design enables the broadest and deepest possible interpretation of a common data format that synthesizes abstract rules with concrete results in world events administered by digitized government policies. This format should be accessible to every literate person, not just highly trained programmers. Combining human-readable text with a specific set of executable contracts requires some form of technical certainty, which a Ricardian Contract provides. The Ricardian Contract, invented by Ian Grigg in 1996, binds human-readable text and a machine-executable contract into a single package and identifies that package by a fixed-length number, called a hash code, produced by a secure hash-generating algorithm. Bitcoin likewise uses cryptographic hashes to secure money transfers between users. Because secure hash functions are designed so that it is computationally infeasible to alter content without changing its hash, or to find two different packages with the same hash, hashing makes the data format resistant to tampering. The Ricardian Contract is also known as the Bowtie model because the diagram of the proposed data format looks like a bowtie.
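The bowtie idea, binding human-readable prose and machine-executable code under a single identifier, can be sketched in a few lines of Python. This illustrates the principle under stated assumptions and is not Grigg's original format: the field names are hypothetical, the package is serialized as canonical JSON, and SHA-256 stands in for "a secure hash-generating algorithm".

```python
import hashlib
import json


def ricardian_id(prose: str, code: str, parameters: dict) -> str:
    """Return a fixed-length identifier binding prose, code, and parameters.

    Canonical JSON (sorted keys, no extra whitespace) ensures the same
    package always serializes to the same bytes, hence the same hash.
    """
    package = {"prose": prose, "code": code, "parameters": parameters}
    canonical = json.dumps(package, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


prose = "The Buyer pays the Seller 100 units within 30 days of delivery."
code = "def due(delivered_day): return delivered_day + 30"
contract_id = ricardian_id(prose, code, {"amount": 100, "days": 30})
print(len(contract_id))  # 64 hex characters, whatever the contract's length
```

Changing a single character of either the prose or the code yields a different identifier, so both halves of the bowtie are protected by the same number.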

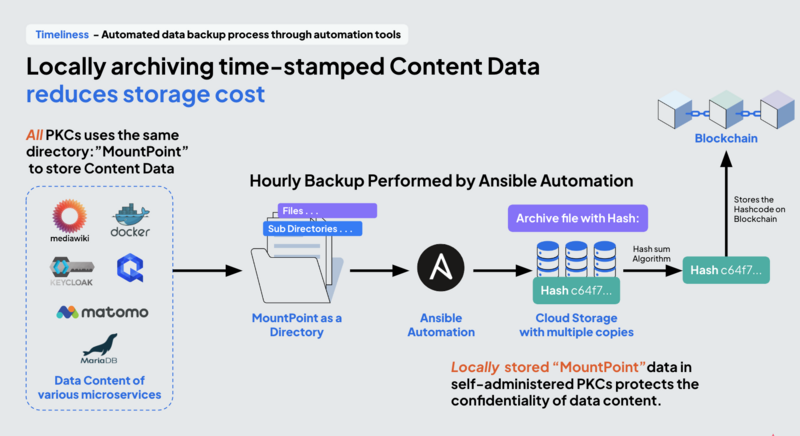

Trustworthy and Economical Data Storage

Blockchain, as an immutable database, can be expensive to operate. It is particularly expensive to store and synchronize large amounts of data across many computers. It can, however, serve as an economically viable notary service if it is coupled with local storage systems. As proposed in the following diagram, the blockchain only needs to store the hash code of a Valid Contract, while the detailed data content of the Valid Contract can be stored in the local file systems of participating parties. As long as the presented data content generates the same hash code, it can be considered valid. If any modification is made to the contract package, running it through the same secure hash algorithm again will, with overwhelming probability, generate a different number, notifying users that the data package has been tampered with.
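The storage split described above can be sketched as follows. Assumptions, all for illustration: a plain Python dictionary stands in for the blockchain's record of contract hashes, a temporary directory stands in for a participant's local file system, and `register` and `verify` are hypothetical names.

```python
import hashlib
import tempfile
from pathlib import Path

onchain = {}  # stand-in for a blockchain: contract name -> SHA-256 hex digest


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def register(name: str, path: Path) -> None:
    """Store only the hash 'on-chain'; the content stays in the local file."""
    onchain[name] = digest(path.read_bytes())


def verify(name: str, path: Path) -> bool:
    """Content is valid if re-hashing it reproduces the registered hash."""
    return onchain.get(name) == digest(path.read_bytes())


with tempfile.TemporaryDirectory() as tmp:
    contract = Path(tmp) / "contract.txt"
    contract.write_text("Seller delivers 10 units; Buyer pays within 30 days.")
    register("contract-001", contract)
    print(verify("contract-001", contract))  # True
    contract.write_text("Seller delivers 5 units; Buyer pays within 30 days.")
    print(verify("contract-001", contract))  # False: tampering detected
```

Whatever the document's size, only a 64-character digest is kept in the shared ledger, which is what makes the notary role economical.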

This public infrastructure, coupled with data and computing service packaging tools such as microservices and microservice orchestration, provides a new breed of data services that allow citizens, like large organizations, to own and operate data centers. This creates a form of scale-free data sovereignty that was previously impossible. Tools such as the Personal Knowledge Container, created in Indonesia, were designed to demonstrate the feasibility of such an egalitarian data instrument.

Summary: An End-to-End Argument on SoG

It is widely known that large-scale Internet engineering follows a so-called Hourglass Model. To allow any organization to conduct self-governance in the Internet of Everything era, one must ask what End-to-End argument can scale to cover everything. The answer is an Hourglass Model with a common point of control, a shape that recurs in multiple concepts, workflow cycles, and operational models. The causal cone of Past→Present→Future and the Ricardian Contract (visually presented as the so-called Bowtie Model) are both drawn as hourglass-shaped diagrams. The DevOps cycle is also often drawn in the shape of a Möbius strip, which likewise resembles an hourglass. These recurring hourglasses are not coincidences.

There is a reason that all these concepts, cycles, and models must have a common point of control. Control can only be exercised in “the present.” One does not “live in the past,” after all. “The present” is short and local, yet it faces a very large world of possibilities. Therefore, every decision with a big impact must condense a significant amount of past events and expand into a wide range of future possibilities. That is why all such system design patterns are eventually shaped like hourglasses. Some are oriented horizontally and others vertically, but the general shape is wide at both ends and narrow in the middle. The narrowing and widening structure of an hourglass shows that data can be compressed and shaped asymmetrically. This asymmetry gives utility to practical applications, and the practicality of data manipulation introduces accountable ways to deal with governance issues. It is also why the hourglass has been recognized in the literature as a geometric pattern representing controllability. Interestingly, the hourglass is also a device for measuring time.

Leverage Information Asymmetry for Self-Governance

We argue that information asymmetry exists in nature and cannot be avoided. It can, however, be distributed through technology by giving more people a monopoly on their own information. By using computing resources that many people around the world already own privately, individuals can obtain information in timely, accountable, and observable ways according to their interests. Alternatively, people can create information asymmetry based on their private data assets, thereby creating mutual dependencies that counterbalance systems favoring people with more money or more computational knowledge. The people who can use SoG are not just those with powerful computers or high-bandwidth network connections. SoG changes the power structure by helping more people understand how data and causal structures can be used to create information asymmetry in their subjects of interest. Moreover, those who succeed must have timely, accountable, and observable instruments for processing application-specific data in order to govern their own organizations adequately. This is why a free and unbiased technical instrument must be created and adopted to enable correctness in governance. Creating such an instrument is a matter of pragmatic data engineering, not just theoretical science, and is not discussed here.

SoG evolves with the technology of data manipulation

An automated process for identifying the causal entanglement of data over spacetime would be the ultimate crystal ball for governed outcomes. According to Han Feng, physicists such as Kofler and Zeilinger have already explained the boundaries of predictive power in quantum physical terms. With new waves of Internet of Things technologies and higher-bandwidth communication infrastructures, the causal entanglement of data over spacetime still holds many surprises. Because the Science of Governance is scale-free and domain-independent, abstract structures such as the Logic Model, the Ricardian Contract, and the Hoare Triple will continue to be employed in future scientific endeavors. Regardless of an organization’s size, ordered pairs of instructions (Logic Models and Hoare Triples) are the knowledge needed to enable self-governance, and they should be treasured by anyone who possesses valuable private data.

Tools: Personal Knowledge Container (PKC)

Tech for Trust: A Data Infrastructure for Trust Building

The Personal Knowledge Container (PKC) is both a scalable personal library and a data wallet: a self-administered knowledge management solution that addresses the problems caused by information asymmetry as defined by the Science of Governance (SoG). PKC is built from domain-driven microservices rather than as a monolithic application, which keeps its functionality modular and avoids unnecessary entanglement. It can transfer a large amount of digital rights at little cost. PKC was created to show that it is technically feasible and economically viable for individuals and small organizations to process data in a timely, accountable, and observable manner, using systems similar or equivalent to those previously affordable only to large-scale organizations. This means that individuals can process very large amounts of data. Large-scale organizations would also benefit from PKC, since using it could save them the large cost of transferring terabytes of data. It can be trusted because it is open, transparent, and, most importantly, owned and operated by the people who generate the data at the source. PKC uses currently available technologies that let data providers retain the right to govern data at its origin, so that its technical architecture is trustworthy.

PKC is a technical solution that addresses the political problem of data ownership. By making data processing technologies available to the masses through Open-sourced and freely distributed PKCs, this instrument should help reduce technologically and economically induced information asymmetry, and therefore build trust amongst society participants.

As Blockchain and Smart Contract data infrastructures become increasingly mature, the geographically dispersed collaborative workflow features promised by the “Web3.0” programming model have already been incorporated into PKC.

The increasing adoption of digital payment systems and publicly registered Data Assets, commonly known as Non-Fungible Tokens (NFTs), shows that Internet-scale marketplaces can be designed and deployed by grassroots startups. Since Ethereum was publicly deployed in 2015 as a “programmable blockchain,” many highly publicized online economic events have been conducted according to rules encoded in machine-executable contracts. This also gave birth to the field of Cryptoeconomics, an area of digitally transformed economic activity usually associated with Decentralized Finance (DeFi) applications. The lack of an Internet-scale regulatory framework to govern these economic activities is another kind of information asymmetry, one that favors communities with better access to data processing technologies. By bundling Blockchain-compatible services and Smart Contract deployment capabilities, PKC reduces this unfair advantage. As a general-purpose data and computing service container, PKC also allows more people to participate in these online marketplaces with a wider range of asset classes. In terms of design intent, PKC is not just a data manipulation tool for Information Technology professionals; it is a governance technology stack that addresses the evolving needs of large-scale online interactions.

Processes and Resources: Manipulate data assets in Open Formats

To ensure trustworthiness, PKC’s source code should not only be open source; it is even more important that it comply with open and exchangeable formats. Open-source software is usually created by other parties, so when mistakes in the software occur, a user may have a hard time identifying exactly what to fix. Open formats mitigate this problem because one can directly inspect the encoded data itself, independent of the software that produced it. The following diagram shows the open-source solutions adopted as the key functional elements of PKC.

A format is open if its encoding structure and the metadata describing that structure are transparent and well documented. One technical effort to ensure openness is the OpenAPI Specification, originally the Swagger project. It describes a service’s HTTP interface in a document that is both human-readable and machine-readable (JSON or YAML), and companion tools such as Swagger UI provide web-based graphical user interfaces that expose this metadata and allow data to be extracted and submitted through standard network requests. PKC adopts the OpenAPI approach by exposing the data content stored in its MediaWiki database. Other services, such as Keycloak and QuantUX, already have native OpenAPI implementations.

Connect Web3 into the Cloud Native Industry

Distributing these technology building blocks is not a new idea. It has been a massive social and economic movement in the making for more than 30 years. The most relevant technologies include software container technologies such as Docker, container orchestration technologies such as Kubernetes, and the OpenShift framework for a standardized Continuous Integration and Continuous Delivery (CI/CD) tool chain. These software integration efforts are not only free, but also supported by abundant tutorials and community practitioners who can help create fully functional distributed data centers at near-zero software development cost. In terms of hardware infrastructure, PKC is designed to reduce the cost of data ownership by using commodity hardware to enable remote and low-cost communication services, such as Wi-Fi mesh networks and 3G to 5G access points. Because personal data is stored locally, one may choose to store only the hash code of a large data set on a blockchain. This “layered” data storage solution retains a mechanism for verifying that data content is tamper-free, without incurring the massive cost of storing the data itself on a public blockchain.

PKC can also serve computing services locally through containerized microservices, reducing unnecessary Internet traffic and network operating costs. Docker containerization and virtualization let us treat a software package as a functional instrument rather than a collection of code, so that it can be deployed concisely and consistently and scaled as needed.

It is the combination of these existing open-source technologies and commoditized hardware solutions that makes mass adoption of PKC possible. This container is a trust-building technology stack that empowers individuals and agencies to exercise data sovereignty affordably. For example, when one needs to share a file with many potential downloaders, one solution is to store it using the IPFS format and naming scheme, so that it can be addressed across the Internet by its content. PKC can include an IPFS service node as one of its Dockerized services.
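Content addressing, the idea behind IPFS naming, means that an item's address is derived from its bytes rather than from its location. The Python sketch below illustrates only that idea: it uses a bare SHA-256 hex digest as the address, which is not a real IPFS CID (IPFS uses multihash-encoded CIDs over chunked content), and the class name `ContentStore` is hypothetical.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is derived from the value itself."""

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data  # identical content is stored only once
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]
        # Any holder can check that the bytes match the address requested.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
addr = store.put(b"Annual budget report 2022")
print(store.get(addr) == b"Annual budget report 2022")  # True
print(store.put(b"Annual budget report 2022") == addr)  # True: same content, same address
```

Because the address is a function of the content, any downloader can verify what it received without trusting the peer that served it.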

NFT: Transferring Governance Rights

Data formats also need to support exchangeability. A piece of data should be exchangeable in the marketplace through a common set of annotations that denote its ownership and ensure its content can be edited only by its owner. This can be accomplished by publishing, or minting, data assets as Non-Fungible Tokens (NFTs). Without going into the technical details of minting, the main obstacle is that public NFT minting services require upfront cryptocurrency payments. The good news is that some public minting and publishing services charge extremely low fees. NFTs, as a special kind of securely protected data content, allow many kinds of workflow security programming that were not feasible before. For example, one may transfer ownership of certain rights that serve as the condition for modifying or authorizing information in a workflow, so that decision-making accountability can be controlled programmatically through NFT ownership transfers. This is a critical feature in managing the governance of many organizations.
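Controlling decision rights through token ownership can be sketched with an in-memory registry. This is a hypothetical Python illustration, not an ERC-721 implementation or any minting service's API: the `RightsRegistry` class, token IDs, and account names are invented, and a real deployment would keep ownership records on a blockchain and authenticate callers cryptographically.

```python
class RightsRegistry:
    """Toy registry in which owning a token is the condition for editing a document."""

    def __init__(self):
        self.owner_of = {}   # token id -> current owner
        self.documents = {}  # token id -> content governed by that token

    def mint(self, token_id: str, owner: str, content: str) -> None:
        if token_id in self.owner_of:
            raise ValueError("token already minted")
        self.owner_of[token_id] = owner
        self.documents[token_id] = content

    def transfer(self, token_id: str, sender: str, recipient: str) -> None:
        if self.owner_of.get(token_id) != sender:
            raise PermissionError("only the current owner can transfer")
        self.owner_of[token_id] = recipient

    def edit(self, token_id: str, editor: str, new_content: str) -> None:
        if self.owner_of.get(token_id) != editor:
            raise PermissionError("only the token owner may edit")
        self.documents[token_id] = new_content


reg = RightsRegistry()
reg.mint("budget-approval", "finance_office", "Draft budget v1")
reg.transfer("budget-approval", "finance_office", "audit_committee")
reg.edit("budget-approval", "audit_committee", "Audited budget v2")
print(reg.documents["budget-approval"])  # Audited budget v2
```

After the transfer, the finance office can no longer edit the document; the single act of transferring the token moved decision-making accountability to the audit committee.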

Content filtering independent of outside influence

An important feature of PKC is the ability to filter data content with self-operated content filters. Controlling the filters is useful not only for preventing children from seeing age-inappropriate content, but also for actively filtering and prioritizing content that benefits social, intellectual, and economic activities. Running a privately owned knowledge container such as PKC enables individuals to search, organize, and store data content in ways that can be isolated from data surveillance technologies. Without a self-administered knowledge container, online content consumption is inevitably influenced by external parties, which is undesirable: the ability to configure data filtering independently, with minimal external influence, is an aspect of freedom for modern citizens. Because PKC performs data filtering on privately controlled computing services, it also enables a form of law enforcement that does not intrude on individual privacy.
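A self-operated filter can be as simple as rules the owner writes and runs locally. The Python sketch below is a hypothetical illustration: the rule format (blocked terms plus weighted priority terms, all lowercase) and the sample items are assumptions, not PKC's actual filter configuration. It drops items containing blocked terms and ranks the rest by the owner's own priorities, without consulting any external service.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class FilterRules:
    blocked: Set[str] = field(default_factory=set)            # lowercase terms to drop
    priorities: Dict[str, int] = field(default_factory=dict)  # lowercase term -> weight


def apply_filter(items: List[str], rules: FilterRules) -> List[str]:
    """Remove blocked items, then sort the rest by the owner's priority score."""
    def words(text: str) -> Set[str]:
        return set(text.lower().split())

    kept = [item for item in items if not (words(item) & rules.blocked)]

    def score(item: str) -> int:
        return sum(rules.priorities.get(w, 0) for w in words(item))

    return sorted(kept, key=score, reverse=True)  # stable: ties keep input order


rules = FilterRules(blocked={"gambling"}, priorities={"budget": 3, "school": 2})
feed = [
    "Celebrity gossip roundup",
    "Online gambling promotion",
    "School board budget hearing",
    "Local school reopens",
]
print(apply_filter(feed, rules))
# ['School board budget hearing', 'Local school reopens', 'Celebrity gossip roundup']
```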

Desirable Outcome: Egalitarian access to Data Processing Technologies

A PKC implementation can be considered successful when it satisfies the following conditions. It enables PKC owners to collect, catalog, navigate, search, and process data assets using computing technologies released to the general public as open-source and Open Format solutions. All chosen technical solutions should be affordable to everyone. It must store the change history of data assets by registering changes through technically secured (cryptographically verifiable) procedures of various levels, so that one can trace when, and by whom, a collection of data was changed. This makes it technically possible to assign accountability, observe outcomes, and change them with timely remedies. It must encode knowledge in a Correct by Design (CbD) format that fits into logic models composed of human-readable text and relevant executable code. The operational experience accumulated from executing each logic model should also be recorded by PKC, to provide feedback for future refinement of the logic model.

Only when a sufficiently wide population has egalitarian access to data processing technology comparable to that of large tech companies can the societal issue of information asymmetry be systematically addressed. PKC is a technical solution to this concern, as prescribed by the Science of Governance.

Applications: Concrete SoG Examples

Selected Applications: Trans-disciplined SoG Examples

Proposed Actions after G20 in 2022

Scientific Governance in Action

Conclusion

Acknowledgements

Works Cited

Appendix

- [1] Scott, Dana (January 1, 1970). "Outline of a Mathematical Theory of Computation". Oxford University Computing Laboratory, Programming Research Group.

- [2] Luo, Jianxi (January 19, 2022). "Data-Driven Innovation: What Is It?". IEEE Transactions on Engineering Management.