Getting Started on Amazon EKS

Background

The goal of this document is to set up a Kubernetes cluster on Amazon Web Services (AWS) as the base Kubernetes environment for deploying the PKC application. The material is drawn from the AWS documentation and other sources, which are mentioned at the bottom of the page.

Prerequisites

Complete the prerequisites below before following the rest of this document.

1 Preparation Steps

- kubectl; a command line tool for working with Kubernetes clusters. This guide requires version 1.21 or later. To install kubectl, follow this link: AWS - How to Install Kubectl

- eksctl; a command line tool for working with EKS clusters that automates many individual tasks. This guide requires version 0.61.0 or later. To install or upgrade eksctl, follow this link: Install or upgrade eksctl

- Required IAM permissions; the IAM security principal you use must have permissions to work with Amazon EKS IAM roles and service-linked roles, AWS CloudFormation, and a VPC and its related resources. You must complete all steps in this guide as the same AWS user. To set up the IAM permissions that enable communication between the AWS infrastructure and the Kubernetes cluster, follow this link: Getting started with IAM
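The version requirements above can be checked from a script before continuing. This is a minimal sketch, assuming a POSIX shell with awk; `version_ge` is a hypothetical helper name, not part of kubectl or eksctl. It compares versions numerically, component by component, so that 0.100.0 correctly ranks above 0.61.0 (a plain string comparison would get this wrong).

```shell
#!/bin/sh
# version_ge HAVE WANT: succeed (exit 0) if dotted version HAVE >= WANT.
# A leading "v" is ignored, and non-numeric suffixes such as "-eks-6b7464"
# are dropped because awk converts "4-eks-6b7464" to the number 4.
version_ge() {
  awk -v have="${1#v}" -v want="${2#v}" 'BEGIN {
    n = split(have, h, ".")
    m = split(want, w, ".")
    len = (n > m) ? n : m
    for (i = 1; i <= len; i++) {
      # Missing components count as 0, so "1.21" equals "1.21.0".
      if (h[i] + 0 > w[i] + 0) exit 0
      if (h[i] + 0 < w[i] + 0) exit 1
    }
    exit 0
  }'
}

# Example (requires eksctl on PATH; `eksctl version` prints e.g. "0.61.0"):
# version_ge "$(eksctl version)" 0.61.0 || echo "eksctl is older than 0.61.0, please upgrade"
```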

2 Create Cluster Control Plane

- Choose the cluster name and Kubernetes version

- Choose the Region and VPC for the cluster

- Configure security for the cluster

3 Create Worker Nodes and Connect Them to the Cluster

- Create a node group

- Choose which cluster to attach it to

- Define the security group, and select the instance type and resources

All of the above steps are necessary because of the amount of computing resources that can be defined within AWS. However, the process can be simplified with the eksctl command line tool; its GitHub repository is at this link: GitHub of eksctl

eksctl automates steps 2 and 3 above with a single command. It also provides granular parameters and configuration options, so that you keep fine-grained control over how the cluster is created.
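As an example of that finer-grained configuration, eksctl can also read a ClusterConfig YAML file instead of command-line flags. The sketch below writes such a file with the same values as the `eksctl create cluster` command used later in this guide. It is a minimal sketch under stated assumptions: `write_cluster_config` is a hypothetical helper, the node group is assumed to be a managed node group, and the `--enable-ssm` setting is left out.

```shell
#!/bin/sh
# write_cluster_config NAME REGION VERSION NODEGROUP NODE_TYPE NODES [FILE]
# Hypothetical helper: writes an eksctl ClusterConfig file (default cluster.yaml)
# mirroring most of the flags used with `eksctl create cluster` in this guide.
write_cluster_config() {
  out=${7:-cluster.yaml}
  cat > "$out" <<EOF
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: $1
  region: $2
  version: "$3"   # quoted so YAML keeps 1.20 as a string, not the number 1.2

managedNodeGroups:
  - name: $4
    instanceType: $5
    desiredCapacity: $6
    volumeSize: 30       # GB, same as --node-volume-size=30
    ssh:
      allow: true        # same as --ssh-access
      publicKeyPath: ~/.ssh/id_rsa.pub
EOF
}

# Example (the second line needs eksctl and AWS credentials):
# write_cluster_config pkc-cluster us-west-2 1.20 pkc-node t2.medium 2
# eksctl create cluster -f cluster.yaml
```

Keeping the settings in a file makes the cluster definition easy to review and keep under version control; `eksctl create cluster -f` reads it in place of the individual flags.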

From this point on, this document guides you through creating the cluster in AWS using eksctl.

eksctl Installation

The commands below install eksctl on macOS using the Homebrew package manager. If you need to install Homebrew first, follow this link: Homebrew

brew tap weaveworks/tap

A tap is a third-party repository added to Homebrew. The command above adds the Weaveworks tap and updates Homebrew's repositories. When it finishes, install eksctl:

brew install weaveworks/tap/eksctl

Homebrew automatically installs all the dependencies eksctl needs. Once it is done, check the installed version of eksctl:

eksctl version

Output

0.61.0

At the time this document was written, the eksctl version was 0.61.0.

Before using eksctl, make sure you are running the latest version. How to update it depends on how eksctl was installed.
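For a Homebrew-based setup, the install step can be made safe to re-run. This is a minimal sketch, assuming macOS with Homebrew and the weaveworks/tap used above; `ensure_installed` is a hypothetical helper name, not a Homebrew command.

```shell
#!/bin/sh
# ensure_installed COMMAND FORMULA: install a Homebrew formula only when
# COMMAND is not already on PATH. Hypothetical helper, not part of Homebrew.
ensure_installed() {
  cmd=$1
  formula=$2
  if command -v "$cmd" >/dev/null 2>&1; then
    echo "$cmd already installed at $(command -v "$cmd")"
  else
    brew install "$formula"
  fi
}

# Example (macOS with Homebrew):
# ensure_installed eksctl weaveworks/tap/eksctl
# If eksctl was installed with Homebrew, upgrade it in place with:
# brew upgrade weaveworks/tap/eksctl
```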

AWS CLI Installation

eksctl communicates with AWS through its APIs, so users need to authenticate with AWS. To set up that authentication, install the AWS CLI. On macOS, the commands below install it:

curl "https://awscli.amazonaws.com/AWSCLIV2.pkg" -o "AWSCLIV2.pkg"
sudo installer -pkg AWSCLIV2.pkg -target /

These commands install AWS CLI version 2 for all users on the machine; note that you need sudo permission. For the complete installation procedure, follow this link: Install AWS CLI. This document was written using AWS CLI version 2. Check the installation result:

aws --version

Output

aws-cli/2.2.29 Python/3.8.8 Darwin/18.7.0 exe/x86_64 prompt/off
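Since this document assumes AWS CLI version 2, that check can also be scripted by parsing the output format shown above. This is a minimal sketch; `aws_cli_version` and `is_aws_cli_v2` are hypothetical helper names, and the parsing assumes the output starts with `aws-cli/<version>` as in the sample.

```shell
#!/bin/sh
# aws_cli_version OUTPUT: extract the version from `aws --version` output, e.g.
# "aws-cli/2.2.29 Python/3.8.8 Darwin/18.7.0 exe/x86_64 prompt/off" -> "2.2.29"
aws_cli_version() {
  first_field=${1%% *}            # "aws-cli/2.2.29"
  echo "${first_field#aws-cli/}"  # "2.2.29"
}

# is_aws_cli_v2 OUTPUT: succeed if the reported major version is 2 or later.
is_aws_cli_v2() {
  version=$(aws_cli_version "$1")
  major=${version%%.*}
  [ "$major" -ge 2 ] 2>/dev/null   # a non-numeric major simply fails the test
}

# Example (requires the AWS CLI on PATH; 2>&1 covers versions printed to stderr):
# is_aws_cli_v2 "$(aws --version 2>&1)" || echo "AWS CLI version 2 not found"
```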

Authenticate your machine

To let your machine communicate with AWS, you need to create AWS credentials and use them to authenticate your machine. The process below creates those credentials; its goal is to provision a user's Access Key ID and Secret Access Key for use with the aws command line.

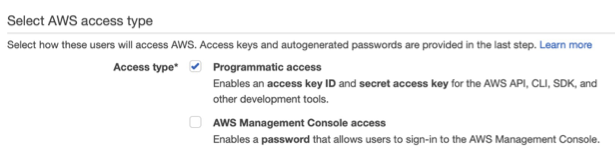

First, you need an AWS account with administrator access to create the user. Go to the IAM Dashboard, then click Users in the left-hand menu. On the IAM > Users screen, click the Add users button, or use an existing user. During user creation, attach the AdministratorAccess policy, which eksctl needs to function properly. Creating a new user takes five steps. You might want to enable only the programmatic access type.

Once the user is created, create an access key on the Security credentials tab of the user page. The screen looks similar to the picture above.
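If administrator credentials are already configured for the AWS CLI on some machine, the console steps above can also be scripted. This is a minimal sketch under that assumption; `run` and `create_eksctl_user` are hypothetical helper names, while the three `aws iam` calls are standard AWS CLI commands. Setting `DRY_RUN=1` only prints the calls, which is a cheap way to review them before touching your account.

```shell
#!/bin/sh
# run CMD...: execute a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# create_eksctl_user USER: hypothetical helper that scripts the console steps above.
create_eksctl_user() {
  user=$1
  run aws iam create-user --user-name "$user"
  # AdministratorAccess is the AWS managed administrator policy.
  run aws iam attach-user-policy --user-name "$user" \
    --policy-arn arn:aws:iam::aws:policy/AdministratorAccess
  # Prints AccessKeyId and SecretAccessKey; the secret is returned only this once.
  run aws iam create-access-key --user-name "$user"
}

# Example: preview the calls, then run them for real with admin credentials configured.
# DRY_RUN=1 create_eksctl_user eks-admin
# create_eksctl_user eks-admin
```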

Save the Access Key ID and Secret Access Key. The secret cannot be displayed again; if you lose it, you will have to create a new access key. Once you have the Access Key ID and Secret Access Key, go to your local machine's terminal and run the command below:

aws configure

The AWS CLI then prompts for the Access Key ID and the Secret Access Key, followed by a default region name and a default output format. It stores the credentials in ~/.aws/credentials and the other settings in ~/.aws/config.
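To confirm which key `aws configure` stored for a profile, the credentials file can be read directly. This is a minimal sketch, assuming the `key = value` layout that `aws configure` writes; `credentials_key_id` is a hypothetical helper name.

```shell
#!/bin/sh
# credentials_key_id [FILE] [PROFILE]: print the aws_access_key_id stored for
# PROFILE in an AWS shared credentials file (defaults: ~/.aws/credentials, "default").
# Assumes the "key = value" layout that `aws configure` writes.
credentials_key_id() {
  file=${1:-$HOME/.aws/credentials}
  profile=${2:-default}
  awk -v want="[$profile]" '
    /^\[/ { in_profile = ($0 == want); next }   # entering a new [profile] section
    in_profile && $1 == "aws_access_key_id" { print $3; exit }
  ' "$file"
}

# Example: show the key ID stored for the default profile.
# credentials_key_id
```

This only shows what is stored locally; to check that the keys are actually accepted by AWS, `aws sts get-caller-identity` returns the account and user ARN for the configured credentials.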

eksctl command

The most basic eksctl command is:

eksctl create cluster

It creates all the necessary resources listed at the beginning of this document, using default values for everything. You can run it to see the process and the result. To delete all of those resources and clean up, use this command:

eksctl delete cluster [cluster-name]

This command cleans up all the related resources. For our use case, below are the minimum parameters that need to be passed to eksctl to customize the cluster:

eksctl create cluster \
  --name pkc-cluster \
  --version 1.20 \
  --region us-west-2 \
  --nodegroup-name pkc-node \
  --node-type t2.medium \
  --nodes 2 \
  --node-volume-size=30 \
  --ssh-access \
  --enable-ssm

Below is an explanation of each parameter:

- name; the cluster name. Type your chosen cluster name here.

- version; the Kubernetes version to run on the cluster.

- region; the AWS Region in which the cluster is created. Refer to the AWS Console for the list of Regions.

- nodegroup-name; the name of the node group that the worker nodes belong to.

- node-type; the EC2 instance type of the worker nodes. Instance type availability differs between zones; please refer to this link.

- nodes; the number of worker nodes to create in the node group.

- node-volume-size; the storage size of each worker node, in GB. If not supplied, it defaults to 80 GB.

- ssh-access; if supplied, the worker nodes open the SSH port and use ~/.ssh/id_rsa.pub as the public key. Use the matching private key, ~/.ssh/id_rsa, to access the worker nodes.

- enable-ssm; enables AWS Systems Manager (SSM) on the worker nodes, so they can also be managed and accessed through the SSM Agent.
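eksctl picks availability zones automatically (the sample log below shows [us-west-2b us-west-2d us-west-2a]), but a node type is not necessarily offered in every zone: the offerings table later in this document lists t2.medium only for us-west-2a, us-west-2b and us-west-2c. This is a minimal sketch of comparing the two lists before creating the cluster; `missing_zones` is a hypothetical helper name. If a zone is missing, eksctl's `--zones` flag (for example `--zones us-west-2a,us-west-2b,us-west-2c`) pins the cluster to zones that do offer the type.

```shell
#!/bin/sh
# missing_zones ZONES OFFERED: print each zone in ZONES that is not in OFFERED.
# Both arguments are whitespace-separated lists (tabs from `--output text` are fine).
missing_zones() {
  # Collapse tabs/newlines to single spaces and pad both ends so each
  # zone can be matched as a whole word.
  offered=" $(echo $2) "
  for zone in $1; do
    case "$offered" in
      *" $zone "*) ;;                # zone offers the instance type
      *) printf '%s\n' "$zone" ;;    # zone does not offer it
    esac
  done
}

# Example (requires AWS credentials):
# offered=$(aws ec2 describe-instance-type-offerings --location-type availability-zone \
#   --filters Name=instance-type,Values=t2.medium --region us-west-2 \
#   --query 'InstanceTypeOfferings[].Location' --output text)
# missing_zones "us-west-2a us-west-2b us-west-2c" "$offered"
```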

Further explanation of the parameters can be found at this link. Below is truncated sample output from this command:

2021-08-15 14:33:01 [ℹ] eksctl version 0.61.0

2021-08-15 14:33:01 [ℹ] using region us-west-2

2021-08-15 14:33:03 [ℹ] setting availability zones to [us-west-2b us-west-2d us-west-2a]

2021-08-15 14:33:03 [ℹ] subnets for us-west-2b - public:192.168.0.0/19 private:192.168.96.0/19

2021-08-15 14:33:03 [ℹ] subnets for us-west-2d - public:192.168.32.0/19 private:192.168.128.0/19

2021-08-15 14:33:03 [ℹ] subnets for us-west-2a - public:192.168.64.0/19 private:192.168.160.0/19

2021-08-15 14:33:03 [ℹ] nodegroup "pkc-node" will use "" [AmazonLinux2/1.20]

...

2021-08-15 14:56:16 [✔] EKS cluster "pkc-cluster" in "us-west-2" region is ready

Check the result of the cluster using the command below:

kubectl get nodes

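NAME                                           STATUS   ROLES    AGE   VERSION
ip-192-168-30-224.us-west-2.compute.internal   Ready    <none>   9h    v1.20.4-eks-6b7464
ip-192-168-72-61.us-west-2.compute.internal    Ready    <none>   9h    v1.20.4-eks-6b7464

To describe the CloudFormation stacks that eksctl created for the cluster, issue the command below:

eksctl utils describe-stacks --region=us-west-2 --cluster=pkc-cluster

To save a complete JSON description of the cluster to cluster-doc.json, the AWS CLI can be used:

aws eks describe-cluster --name pkc-cluster --region us-west-2 --output json > cluster-doc.json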
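The `kubectl get nodes` check above can also be scripted, for example while waiting for the node group to come up. kubectl has a built-in option for this (`kubectl wait --for=condition=Ready nodes --all --timeout=300s`); the sketch below instead counts Ready nodes from the plain table output, which is handy when comparing against the `--nodes` value. `count_ready_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# count_ready_nodes: read `kubectl get nodes` output on stdin and print how many
# nodes have STATUS exactly "Ready". The header line and statuses such as
# "NotReady" or "Ready,SchedulingDisabled" are not counted.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example (requires kubectl configured for the cluster; this guide uses --nodes 2):
# [ "$(kubectl get nodes | count_ready_nodes)" -eq 2 ] && echo "all worker nodes are Ready"
```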

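Not every node type is offered in every availability zone. To see where a node type is offered in your selected region, list its offerings per zone; the command below does this for t2.medium in us-west-2. To list all instance types offered in the region instead, drop the --filters option.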

aws ec2 describe-instance-type-offerings --location-type availability-zone --filters Name=instance-type,Values=t2.medium --region us-west-2 --output table

Output

-------------------------------------------------------
|            DescribeInstanceTypeOfferings            |
+-----------------------------------------------------+
||               InstanceTypeOfferings               ||
|+--------------+--------------+---------------------+|
|| InstanceType |   Location   |    LocationType     ||
|+--------------+--------------+---------------------+|
||  t2.medium   |  us-west-2a  |  availability-zone  ||
||  t2.medium   |  us-west-2c  |  availability-zone  ||
||  t2.medium   |  us-west-2b  |  availability-zone  ||
|+--------------+--------------+---------------------+|

Clean Up

Once everything has been checked and is running, run the command below to clean up and delete the resources created in this tutorial:

eksctl delete cluster <cluster-name>

For the cluster in this guide:

eksctl delete cluster pkc-cluster --region us-west-2

Output

2021-08-18 02:49:25 [ℹ] eksctl version 0.61.0

2021-08-18 02:49:25 [ℹ] using region us-west-2

2021-08-18 02:49:25 [ℹ] deleting EKS cluster "pkc-cluster"

2021-08-18 02:49:29 [ℹ] deleted 0 Fargate profile(s)

2021-08-18 02:49:32 [✔] kubeconfig has been updated

2021-08-18 02:49:32 [ℹ] cleaning up AWS load balancers created by Kubernetes objects of Kind Service or Ingress

2021-08-18 02:50:20 [ℹ] 3 sequential tasks: { delete nodegroup "pkc-node", 2 sequential sub-tasks: { 2 sequential sub-tasks: { delete IAM role for serviceaccount "kube-system/alb-ingress-controller", delete serviceaccount "kube-system/alb-ingress-controller" }, delete IAM OIDC provider }, delete cluster control plane "pkc-cluster" [async] }

...

2021-08-18 02:54:26 [ℹ] will delete stack "eksctl-pkc-cluster-addon-iamserviceaccount-kube-system-alb-ingress-controller"

2021-08-18 02:54:26 [ℹ] waiting for stack "eksctl-pkc-cluster-addon-iamserviceaccount-kube-system-alb-ingress-controller" to get deleted

2021-08-18 02:54:26 [ℹ] waiting for CloudFormation stack "eksctl-pkc-cluster-addon-iamserviceaccount-kube-system-alb-ingress-controller"

2021-08-18 02:54:46 [ℹ] deleted serviceaccount "kube-system/alb-ingress-controller"

2021-08-18 02:54:48 [ℹ] will delete stack "eksctl-pkc-cluster-cluster"

2021-08-18 02:54:48 [✔] all cluster resources were deleted

Conclusion

eksctl greatly simplifies creating a Kubernetes cluster in AWS, especially the subnet, security, and authentication steps. Even so, the procedure above is still fairly complicated overall, and eksctl hides many configuration details. It is highly recommended to explore each aspect that eksctl automates, to gain a fuller understanding of how AWS manages the cluster and to take more control over the solution.
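eksctl provisions the cluster through CloudFormation stacks (the delete log above names several, such as eksctl-pkc-cluster-cluster), so listing them is a practical starting point for exploring what it automated, and for confirming that cleanup left nothing behind. This is a minimal sketch; `eksctl_stacks` is a hypothetical helper, and its prefix match assumes the `eksctl-<cluster>-...` naming seen in that log.

```shell
#!/bin/sh
# eksctl_stacks CLUSTER: read CloudFormation stack names (one per line) on stdin
# and print those following the "eksctl-<cluster>-..." naming seen in the delete log.
# Note: a cluster named "pkc" would also match stacks of "pkc-cluster".
eksctl_stacks() {
  prefix="eksctl-$1-"
  # "|| [ -n ... ]" keeps a last line that has no trailing newline.
  while IFS= read -r stack || [ -n "$stack" ]; do
    case "$stack" in
      "$prefix"*) printf '%s\n' "$stack" ;;
    esac
  done
}

# Example (requires AWS credentials): list the stacks that still exist for the cluster.
# aws cloudformation describe-stacks --region us-west-2 \
#   --query 'Stacks[].StackName' --output text | tr '\t' '\n' | eksctl_stacks pkc-cluster
```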

After this document, the next step is to expose our API or application to the internet, which is also a somewhat challenging process. It involves Ingress Controllers: the concept and how they work.